
In a previous blog, we described the outcomes of grant applications according to the initial peer review score. Some of you have wondered about the peer review scores of amended (“A1”) applications. More specifically, some of you have asked about amended applications getting worse scores than first applications; some of you have experienced amended applications not even being discussed after the first application received a priority score and percentile ranking.

To better understand what’s happening, we looked at 15,009 investigator-initiated R01 submissions: all initial submissions came to NIH in fiscal years 2014, 2015, or 2016, and all were followed by an amended (“A1”) application. Among the 15,009 initial applications, 11,635 (78%) were de novo (“Type 1”) applications, 8,303 (55%) had modular budgets, 2,668 (18%) had multiple PIs, 3,917 (26%) involved new investigators, 5,405 (36%) involved human subjects, and 9,205 (60%) involved animal models.

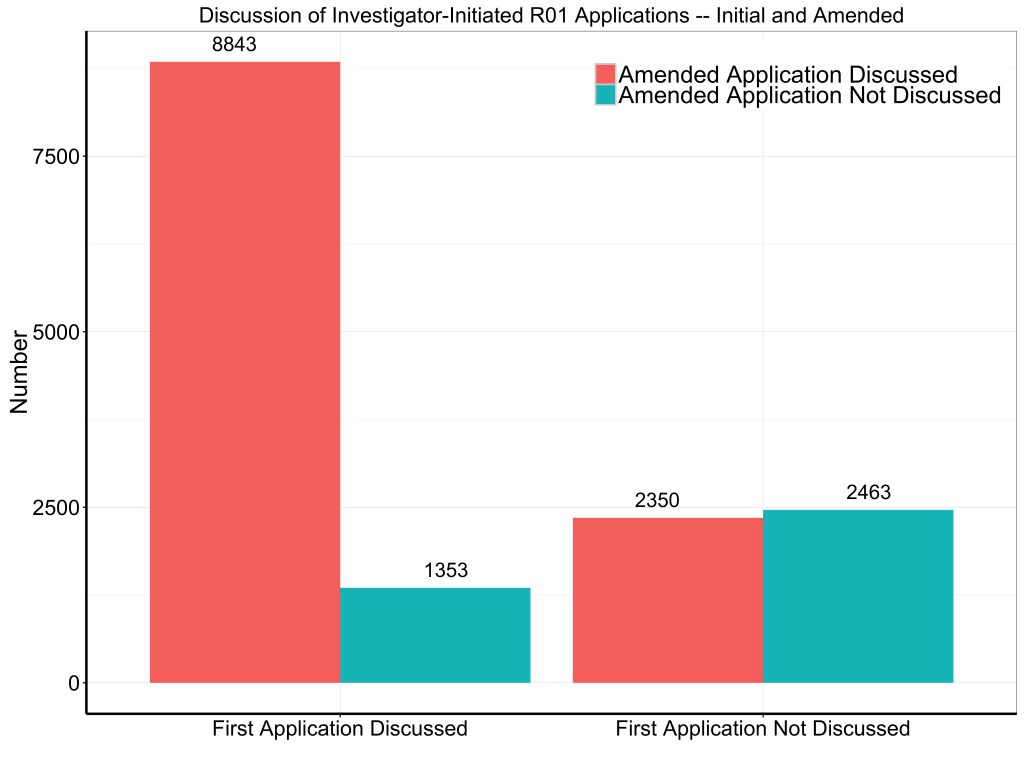

Now the review outcomes: among the 15,009 initial applications, 10,196 (68%) were discussed by the peer review study section. Figure 1 shows the likelihood that the amended application was discussed according to what happened to the initial application. For the 10,196 submissions where the initial application was discussed, 8,843 (87%) of the amended applications were discussed. In contrast, for the 4,813 submissions where the initial application was not discussed, only 2,350 (49%) of the amended applications were discussed.
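The percentages in this paragraph are conditional rates, and they can be rechecked directly from the reported counts. The following is a minimal sketch: the counts are taken from the text above, while the constant and function names are illustrative and not part of the original analysis.

```python
# Counts reported in the post for the 15,009 initial R01 submissions.
TOTAL_INITIAL = 15_009
INITIAL_DISCUSSED = 10_196
INITIAL_NOT_DISCUSSED = 4_813
A1_DISCUSSED_GIVEN_DISCUSSED = 8_843
A1_DISCUSSED_GIVEN_NOT_DISCUSSED = 2_350


def pct(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest integer."""
    return round(100 * part / whole)


def discussion_rates() -> dict:
    """Recompute the rounded percentages quoted in the text."""
    return {
        "initial_discussed": pct(INITIAL_DISCUSSED, TOTAL_INITIAL),
        "a1_discussed_if_initial_discussed": pct(
            A1_DISCUSSED_GIVEN_DISCUSSED, INITIAL_DISCUSSED
        ),
        "a1_discussed_if_initial_not_discussed": pct(
            A1_DISCUSSED_GIVEN_NOT_DISCUSSED, INITIAL_NOT_DISCUSSED
        ),
    }


if __name__ == "__main__":
    for name, value in discussion_rates().items():
        print(f"{name}: {value}%")
```

Each rate uses the size of its own subgroup as the denominator (10,196 or 4,813), not the full 15,009, which is why the 87% and 49% figures are not expected to sum to anything meaningful.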

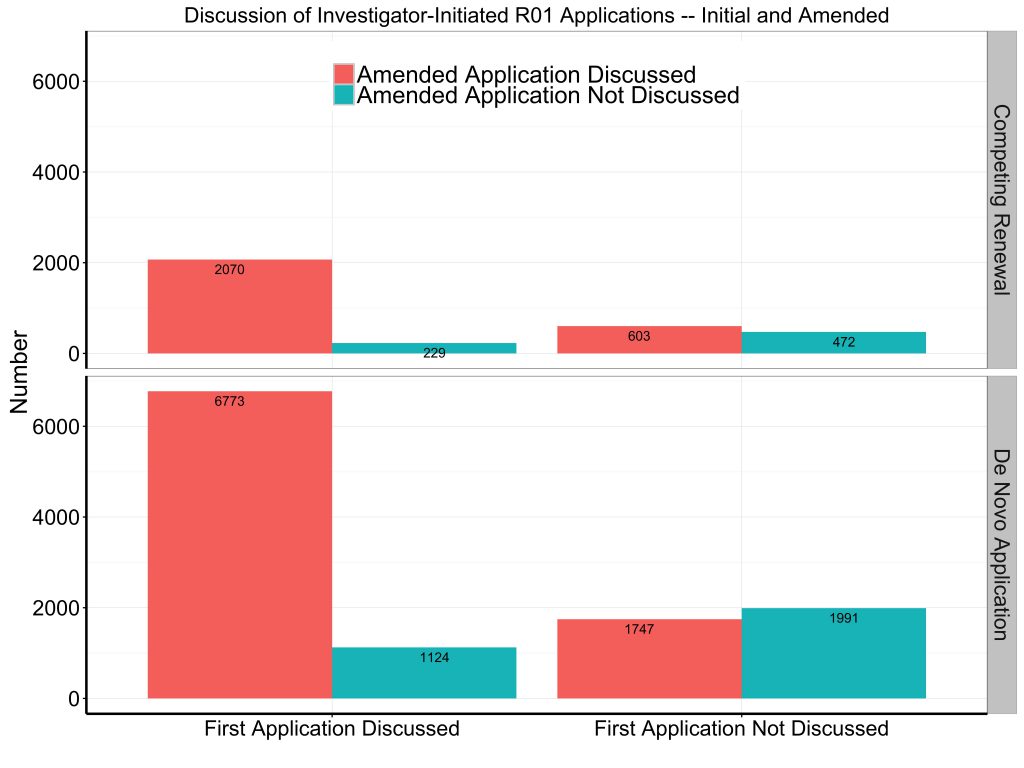

Figure 2 shows the same data, but broken down according to whether the submission was a de novo application (“Type 1”) or a competing renewal (“Type 2”). The patterns are similar.

Table 1 provides a breakdown of discussed amended applications, binned according to the impact score of the original application. Well over 90% of amended applications were discussed when the original application had an impact score of 39 or better.

Table 1:

| Impact Score Group | Amended Application Discussed | Amended Application Not Discussed | Total |
| --- | --- | --- | --- |
| 10-29 | 759 (97%) | 23 (3%) | 782 |
| 30-39 | 3,779 (94%) | 241 (6%) | 4,020 |
| 40-49 | 3,116 (84%) | 588 (16%) | 3,704 |
| 50 and over | 1,189 (70%) | 501 (30%) | 1,690 |
| Total | 8,843 (87%) | 1,353 (13%) | 10,196 |
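The row totals and percentages in Table 1 can be reproduced from the discussed and not-discussed counts alone. This is a small sketch for readers who want to check the arithmetic: the counts are copied from the table, and `TABLE_1` and `summarize` are illustrative names.

```python
# Rows of Table 1: (initial impact score group, A1 discussed, A1 not discussed).
TABLE_1 = [
    ("10-29", 759, 23),
    ("30-39", 3_779, 241),
    ("40-49", 3_116, 588),
    ("50 and over", 1_189, 501),
]


def summarize(rows):
    """Return (group, row total, rounded percent discussed), plus a Total row."""
    out = []
    sum_discussed = 0
    sum_not_discussed = 0
    for group, discussed, not_discussed in rows:
        total = discussed + not_discussed
        out.append((group, total, round(100 * discussed / total)))
        sum_discussed += discussed
        sum_not_discussed += not_discussed
    grand_total = sum_discussed + sum_not_discussed
    out.append(("Total", grand_total, round(100 * sum_discussed / grand_total)))
    return out


if __name__ == "__main__":
    for group, total, pct_discussed in summarize(TABLE_1):
        print(f"{group:12s} total={total:6d} discussed={pct_discussed}%")
```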

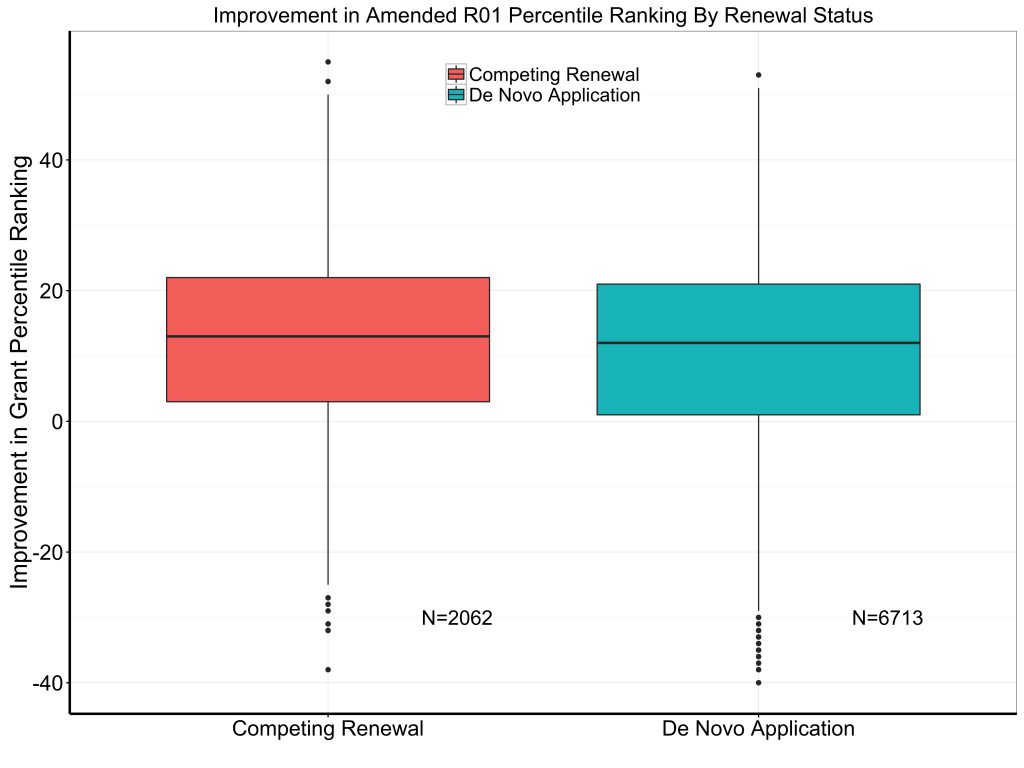

We’ll now shift focus to those submissions in which both the initial and amended applications were discussed and received a percentile ranking. Figure 3 shows box plots of the improvement of percentile ranking among de novo and competing renewal submissions. Note that a positive number means the amended application did better. Over 75% of amended applications received better scores the second time around.
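One way to connect a box plot to a statement like "over 75% improved": if the lower edge of the box (the 25th percentile of improvement) sits above zero, then roughly three quarters or more of the values are positive. The sketch below assumes that reading, which the post does not spell out, and uses made-up improvement values rather than NIH data. The function names are illustrative.

```python
import statistics


def lower_quartile(improvements):
    """25th percentile of improvement (initial minus amended percentile)."""
    q1, _median, _q3 = statistics.quantiles(improvements, n=4, method="inclusive")
    return q1


def share_improved(improvements):
    """Fraction of submissions whose amended percentile was better (improvement > 0)."""
    return sum(1 for x in improvements if x > 0) / len(improvements)


# Hypothetical improvements in percentile points (NOT NIH data).
# Positive means the amended application did better.
EXAMPLE_IMPROVEMENTS = [-12, -3, 2, 4, 6, 9, 11, 15, 18, 24, 27, 31]

if __name__ == "__main__":
    print("lower quartile:", lower_quartile(EXAMPLE_IMPROVEMENTS))
    print("share improved:", share_improved(EXAMPLE_IMPROVEMENTS))
```

In this toy sample the lower quartile is positive, and correspondingly at least three quarters of the values are above zero.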

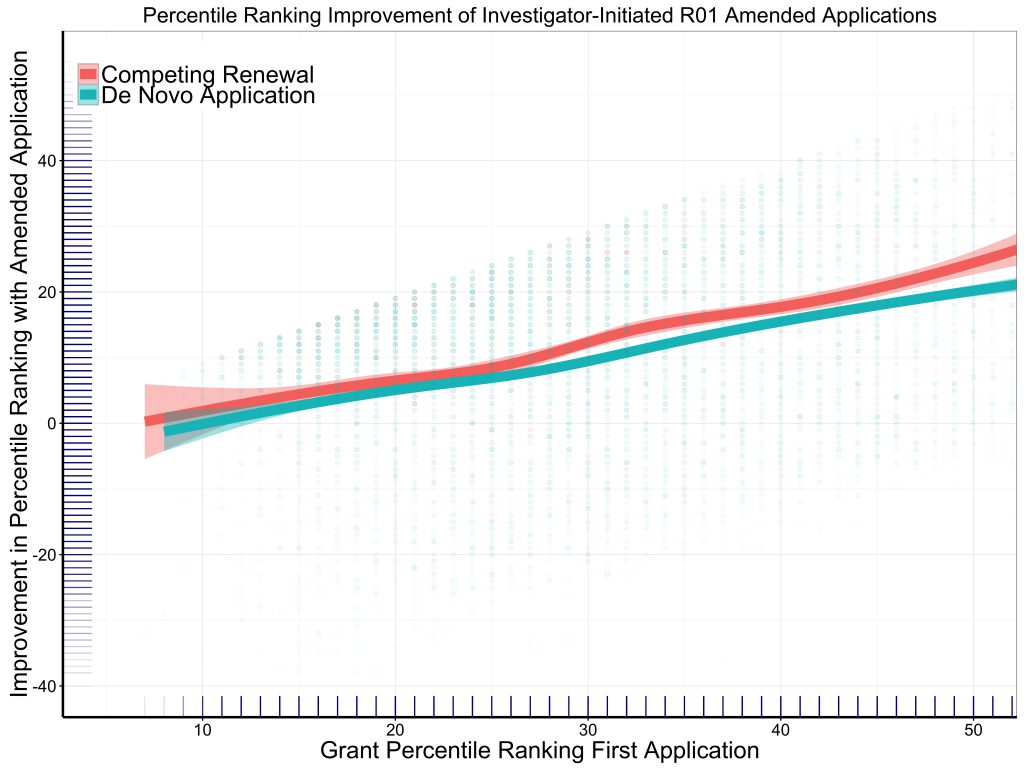

What are the correlates of the degree of improvement? In a random forest regression, the strongest predictor, by far, was the initial percentile ranking; all other candidate predictors (de novo versus competing renewal, fiscal year, modular grant, human and/or animal study, multi-PI applications, and new investigator applications) were minor.

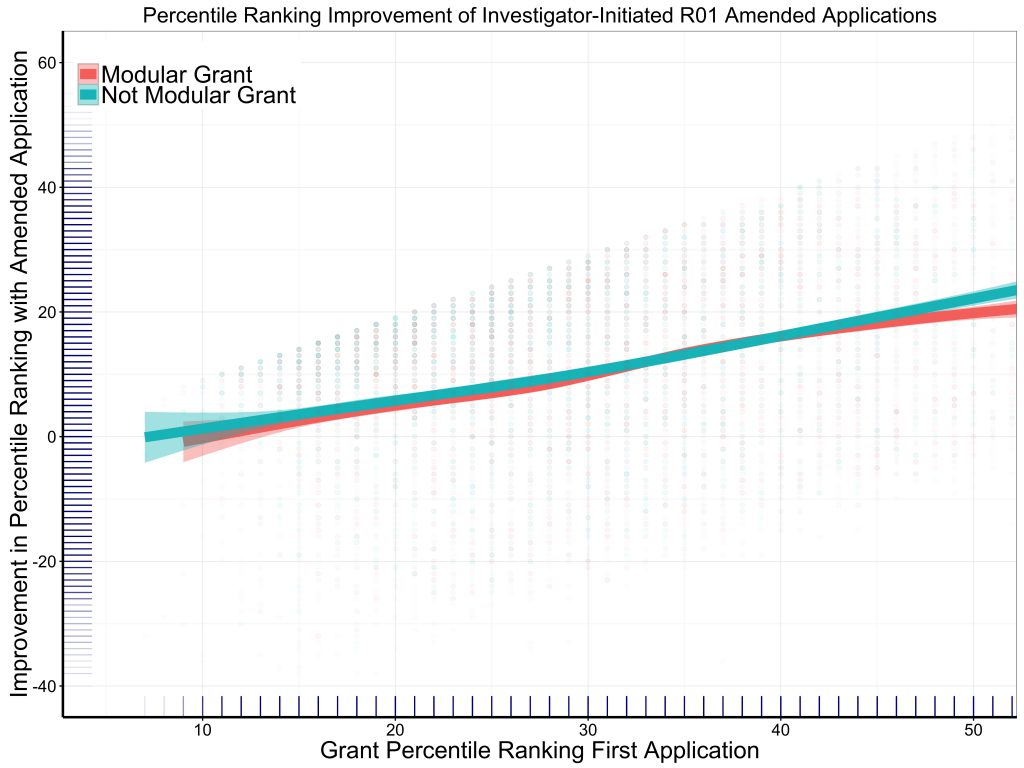

Figure 4 shows the association of percentile ranking improvement according to initial percentile ranking and broken out by de novo application versus competing renewal status. Not surprisingly, those applications with the highest (worst) initial percentile ranking improved the most – they had more room to move! Figure 5 shows similar data, except stratified by whether or not the initial application included a modular budget.

These findings suggest that there is something to the impression that amended applications do not necessarily fare better on peer review, but worse outcomes are much more the exception than the norm. Close to 90% of applications that are discussed on the first go-round are discussed when amended. And for those applications that receive percentile rankings on both tries, it is more common for the percentile ranking to improve.

I am curious about the A1’s that don’t get discussed in the first round and then are not discussed again. These are likely the ones where labs are dying/withering on the vine. Currently I have two of these (distinct R01 proposals that have both been triaged twice). What I am curious about is whether this is a productivity and/or preliminary data issue that basically becomes unresolvable because after the first review people are let go, and then there are not going to be new papers/new data coming in. Welcome to the associate professor hole….

Yep, that exactly describes my experience. At some point, you’ve invested energy into applications that aren’t going to get funded. Your “preliminary results” comprising the bulk of the “proposed research” are never going to get funded, and you have no resources to generate new “preliminary results.” Meanwhile, you spend all of your time on failed grant applications trying to tread water. I’ve finally woken up to the inevitability of the situation and taken a position outside academia. I expect many talented early mid career people to defect in the next 5-10 years if the funding agencies continue to ignore us.

NIH is totally dysfunctional!! What do you expect? The whole process for submitting a grant is time wasted. The bureaucracy is a part of the NIH’s DNA. I think we need to think laterally for alternative solutions! Repeating the same thing and expecting different results is a definition of stupidity!

I dare NIH to publish my comments!

My experience has been the same as yours. The most painful part is that when triaged the reviews are often not even helpful, so I have to guess what aspects they really didn’t like. Moreover, the reviewers have the audacity to complain that the PI has not been that productive recently. Well, duh! So far, I haven’t figured out how to generate a bunch of papers in new directions without any funding to do the work. I think I’ve been highly productive on a per dollar basis! Fortunately, I don’t have too long until I can retire, but a lot of younger people are or soon will be trapped in the same boat.

I am a New Investigator struggling with NIH. I had an A0 application that received a 29 percentile ranking, with high scores for innovation. I resubmitted the A1 at the next New Investigator deadline. This A1, which was better than the first one and included a new last author publication in a very good journal, dropped its percentile by 10. All the reviewers of the A1 application were clearly new, and didn’t care about the comments of the A0 reviewers. Also, when I asked people with experience, I was told never again to resubmit for the New Investigator deadline, as it creates the feeling that you didn’t take enough time to prepare. Doesn’t this defeat the purpose of the New Investigator deadline? Wouldn’t it be better to allow A2s for New Investigators?

Sorry to hear about your experience Emma….

I had a similar experience but with a different twist… improvement between A0 and A1. Got as high as 20th percentile but not yet enough. Was encouraged to resubmit a new A0. By this time, had several strong publications and preliminary data. Second round A0 dropped by more than 20 points on the percentile ranking within the same study section. I really don’t know what to make of it.

… On a different note, I have not found that designation as an early stage or new investigator means much in terms of funding success. But at least, one would hope that amended proposals to the same study section will be reviewed with some consideration of the prior history for that proposal.

I am hopeful Dr. Lauer or other experienced investigators can provide insight.

I disagree with the advice not to take advantage of the new investigator quick turnaround. It depends on the reviewer feedback from the initial submission – if you can truly address the critiques in a short turnaround, you should go for it. I had one R01 that went from scored to unscored on the A1 (after I thought we had addressed the critiques), but then my next R01, also resubmitted in the special new investigator turnaround, was funded (on a totally different topic from the other project). So I don’t think reviewers will necessarily have a negative attitude about the speed of resubmission – it comes down more to whether you have time to adequately address the suggested edits.

The fact that amended applications do not fare better upon re-review should be of great concern. Either one must argue that the majority of investigators fail to respond to the critiques in any substantive way (which seems unlikely for most) or that the reviewers are either not being consistent in their scoring (and of course you can get totally different reviewers) or there is a failure to adequately communicate the issues the reviewers most want addressed. Either way, it seems problematic, and certainly disheartening for investigators with solid, but maybe not fundable scores on the first go-round.

I think it is important to have an open peer review, where the reviewer name should be published along with the comments. It is very easy to hide behind anonymity and provide poor comments that are scientifically not correct. Also there is no scoring for reviewers, you could be a poor reviewer and still continue on the meeting roster.

While the statistics are rigorous, an extremely important issue appears to be ignored in this analysis- the continuity of the review process, i.e., that at least the majority of the previous reviewers of the proposal will also review the amended proposal, or if for the amended proposal there are newly assigned reviewers- those should receive clear instructions and follow certain guidelines re maintaining the continuity of the review process.

It would provide important information to statistically test the hypothesis that A1 applications that fared worse did not have a higher incidence of new reviewers. Currently, the assumption is that a worse outcome is due to new reviewers reviewing A1s.

Is there any way to express this as likelihood of being funded (or likelihood of getting to, say, 20% or higher)? These data would be a great way to help investigators decide what the odds are of an application being rescuable conditional on the initial score. Yes, it’s not an absolute, but a decent estimate of probability of success would help people put their applications into perspective. Operationally, it would cut down on the number of A1s with no realistic chance of getting funded being submitted and make life easier for both CSR and study sections.

Just curious, of the A1s that do get discussed, how many get funded? That to me is the critical question.

I am more curious about the applications that got scored and then not discussed. While the data you present show this as a low percentage, it does not address how one can revise an application and answer the critiques without changing what was viewed as meritorious and yet have a significantly worse outcome. Not all revisions will move an A1 to funding, but it should not be possible for it to become unworthy of discussion. If it is due to reviewer change then there is a bigger problem in peer review, putting all investigators at risk for the whims of who reviews the work rather than relying on the scientific merit.

Well, that is exactly what is happening. The proposal goes to a different set of reviewers, who don’t respect the previous set of reviews and come in with their own set of biases. All it takes is one such reviewer to trash your application and put it into the “not discussed” pile. At least with papers, you are dealing with the same set of reviewers, and the process is more fair. With papers, if you get one biased or misinformed reviewer, the editor will go out for another opinion. With grants, and particularly if you are doing truly innovative research that is outside the norms of your field, it is pretty much guaranteed that you will get one of three reviewers to destroy your application every single time. The real innovators can actually go out and get jobs outside of academia, which is what I’ve recently done. I am not going to spend my career spinning my wheels and accomplishing nothing simply because they won’t fix a broken system quickly enough.

I am an entrepreneur with access to SBIR. Several issues:

1. I suggest the same review panel for A1 application resubmissions, since the new set of reviewers has no respect for the first set of reviewers. The guideline for the second set of reviewers could be to look at what percentage of the questions were answered properly. It is impossible to digest going from a good score to the “Not Discussed” level.

2. I think reviewers should get paid so that they are liable if they do a bad job. We have proof that some of the reviewers might not have even read the proposal fully. How do you account for such ethics? I am a reviewer and we need to be accountable.

3. Overlap of academics with entrepreneurs: Academic people have the luxury of generating data from their other grants to put into an SBIR. Where do the small companies go? Most likely to the PI’s 401(k)!!!

4. The majority of SBIR proposals are reviewed by academic people. With great respect to their service, it is difficult for them to evaluate productibility issues, and they rely more on mechanistic issues. It requires enormous resources to elucidate the mechanism of drugs, even though only limited light can be shed on mechanism.

5. Induction of industry experts into the panel. It won’t happen unless you pay reviewers.

6. Help entrepreneurs know what a perfect score should look like by posting after PI permission.

As a young investigator, I am curious about these types of opportunities outside of academia. How did you find this opportunity?

Not that I disagree with much of your point Carol, but a matter of procedure should be clarified: one reviewer cannot doom your proposal to the “not discussed” pile, rather any one reviewer can request that it be discussed…it takes agreement among the reviewers (and indeed, in most cases a lack of objection from the rest of the SS or SEP) to triage an app.

“With grants, and particularly if you are doing truly innovative research that is outside the norms of your field, it is pretty much guaranteed that you will get one of three reviewers to destroy your application every single time”. Carol this is so true!

I believe it would be more interesting to break down these results by the same categories presented under the first paragraph “Among the 15,009 initial applications, 11,635 (78%) were de novo (“Type 1”) applications, 8,303 (55%) had modular budgets, 2,668 (18%) had multiple PI’s, 3917 (26%) involved new investigators, 5,405 (36%) involved human subjects, and 9205 (60%) involved animal models.” Your analysis assumes that all Institutes are the same and that all types of research are receiving the same treatment. At the very least I would like to see the results based on the categories above (Do the overall results hold for all of them?) and more importantly I would like to see a break down at least by Institute. Finally, I am just assuming all differences presented are statistically significant since you did not present any of those results, although some of the plots and graphs look very close to be not significantly different, but just an effect of the large sample size. Either way thanks for taking a look at these issues, and it could be very well that those experiencing these adverse outcomes are also more likely to share it with colleagues, which then leads to the overall perception of something going on.

I think that grant proposals that score above a 30% and come back as an amended application should be guaranteed the right of review by the original reviewers (unless the applicant requests otherwise). The original reviewers obviously saw merit in the application but had a few “suggestions” to make the proposal better. Having the same set of reviewers would allow the applicant a fair chance to improve the proposal. We do this with manuscript reviews- it seems unfair not to do this with grant reviews. I realize that a specific reviewer might not be at the study section when the revised grant comes in. However, it would not be difficult to have that reviewer do a “call in” review of the grant. As we all know, very few of the grants we are assigned to review for a particular study section would make the cut of being above a 30% but not funded. It would not be an undue burden to ask a reviewer to read one or two revised proposals even if they are not participating in the subsequent study section. There is nothing more discouraging than having addressed all previous concerns but then receiving a poor review by a new reviewer. This simple solution could go a long way towards making us all feel that the NIH review process was fair.

This is an excellent suggestion and I think it would make me more inclined to send proposals to NIH in the future. I have recently had an amended proposal receive a much lower score than the original, based on a statement by one reviewer that claimed erroneously that our proposed work had already largely been done. He/she cited no evidence for this claim and was clearly not one of the reviewers of the original proposal who gave it a good score that barely missed the funding cut-off the first time around. Nothing is more discouraging than to have one misinformed reviewer pan an amended proposal when the original proposal received high scores and encouraging comments.

Anything less than strict adherence by A1 reviewers to the comments made by reviewers on the initial submission is a waste of everyone’s time and resources and makes the entire review process look capricious and irrational. If necessary, reviewers should commit to being available for a second review for a reasonable period of time after they have rotated out of study section. And ALL reviewers should stick to judging only whether the initial comments were addressed – period.

As for anonymous review, it is an unnecessary evil. Transparency would be very beneficial to the review process.

I’m going to guess that you have never sat on the review side…essentially I would have to disagree with everything you said. Reviewers do not spend a lot of time reading the grants they review (this is a necessary evil of the reality of the situation), and fairly often reviewers do not “hit the mark” as it were. It is well within the prerogative of the applicant to argue his point in the responses to reviewer comments (politely of course)…he/she is an expert on the topic after all. If the applicant blindly goes forward with changes and addresses critiques that are off the mark, the result is almost invariably rejection by a “new” set of reviewers…this point is also important; in general it is most helpful to have at least one prior reviewer and at least one new reviewer, providing a reflection on degree of improvement (with regard to prior reviews) and a fresh look at the excellence of the application–the end goal, after all, is not to reward someone for doing what they’re told, but rather to achieve an application that is the closest it can be to a “perfect version of what it could or should be”. This is why the notion that “ALL reviewers should stick to judging only whether the initial comments were addressed” is inherently wrong, and would only be a further disservice upon a poor initial (which we all know happens)–two wrongs don’t make a right after all…unfortunately, the best fix for the discrepancy between review sessions is to allow A2 submissions, which used to be the norm and allowed for the equilibrium to shift towards the sanest reviews.

Finally, anonymity is the only mechanism to ensure honesty in reviewing…numerous studies have demonstrated that reviews of any kind are tailored to causes when anonymity is not ensured.

As I was the one who raised the issue of maintaining the continuity of the review process (further elaborated by others with pretty productive ideas)- when we are asked to review manuscripts for peer reviewed journals, the default in most of the cases is that the manuscript requires a revision. Almost all peer reviewed journals would then approach the original reviewers and ask them to re-review the revised manuscript (personally I always consider this to be my duty and responsibility to agree to review the revision- for exactly the same reason of maintaining the continuity of the review process).

If there is a practical solution to the A1 conundrum, it is the understanding that if you have served as a reviewer of a certain proposal you will agree within reasonable limits and circumstances to also serve as the amended application reviewer (or, as I suggested in my initial comment- to impose specific guidelines on the new reviewers of the amended applications to closely follow the original critique and address in their review whether the issues raised by the previous reviewers were addressed and adjust their scoring accordingly).

I regularly serve as a reviewer in various study sections and have done so for many years now. Unfortunately, in my pretty extensive experience, I am rarely asked to re-review proposals that I had reviewed in their first submission.

Implementing this may be a bit more problematic for de novo applications that are reincarnations of previous failed proposals. In many cases those are intentionally submitted as such in order to circumvent what may appear as a bias by the previous reviewers.

I think these fine points are kind of interesting, but mostly red herrings. The real problems are (1) the PI : NIH budget ratio and (2) the glacial turn-around.

Vast amounts of stress could be reduced (and research programs could be much more rationally planned) by shortening the time between submission, scoring, and “final answer”. Why has that not changed from the days when grants were submitted on actual paper by snail-mail?

A “not discussed” outcome or a high score for an R01 does not mean that the application is bad. It means a faulty ‘number game’ which is designed to benefit some people who have friends sitting on the study sections. For example, only if you have friends who wish to pull out your grant, or a reviewer who sees potential for getting help in future from the applicant, will they give you a 1 or 2 so that it can pass the first hurdle and be put up for discussion. Otherwise, your application will get scores of 3, 4, ... 7 in the scoring sections (Significance, Innovation, Investigators, Approach). The applications with these scores will be triaged (by one or two reviewers) before anyone even knows what you have proposed. Science matters only a little bit for NIH R01 evaluation, if the applicant is lucky. Those reviewers who play these numbers and intentionally kill your grant have never been punished and will continue to serve in the study sections after destroying your career that fully depends on an R01. Your “A1” will not be scored again even if it deserves a fresh look. Dr. Michael Lauer may sit in his office to evaluate the percentage of scored and unscored applications, and will innocently give you some number facts once more without rectifying the real problems!!!

I cannot agree more that the current A1 review is a flawed process, and that an A1 submission should be reviewed by the same reviewers as the original submission. My current situation is good evidence. My 1st submission was scored very well by all 3 reviewers. The A1 submission was just reviewed in March. The study section stated in the A1 Summary Statement, “previous critiques are adequately addressed”. The A1 review, however, completely dismissed the results of the 1st round review. It seems to me that it would be sensible to have the same reviewers for both rounds of submission; otherwise, A1 submission is mostly meaningless based on this analysis of A1 submission outcomes here.

There is great concern over reviewer inconsistency (i.e. changing reviewers between A0 and A1). Of the 25% of A1 proposals whose scores are worse than the A0, it would be very informative to see the data presented so as to demonstrate what percentage of those had 1, 2, or 3 of the reviewers that had reviewed the A0 application. That data could certainly be obtained and shown alongside the data presented here. I have a hard time believing that 25% of A1 applications that were previously scored would simply ignore the previous review recommendations. Having reviewed many grants, I know this is very seldom the case.

I do like the idea that all discussed A0 applications should automatically have their A1 application discussed, though whether it would be overly burdensome for the panel or not may depend on the proportion of a panel’s applications that are typically A1 vs. A0. Too high a proportion would be prohibitive logistically. Perhaps (and I don’t know if this is a logistical reality either) only A1 reviewers who also reviewed the A0 application should decide if the A1 application should be discussed or not. This would still give a new reviewer the chance to point out (based on a different expertise) flaws that the earlier panel did not recognize previously, but still ultimately leaves the decision of the value of those new criticisms to fall on the full panel, rather than having such comments kill the application with a high initial impact score that would throw it into the not discussed group.

I currently have a small R01 that is running out in a year. So, I submitted a new R01 on a new project (but within the field). I received 3 very different reviews for the new R01 submission. The first reviewer wrote like a senior scientist and provided many pages of critiques. Unfortunately, the reviewer was simply not up-to-date and was hung up on old dogma in the field. He/she was very assertive in the critiques even though they were unsubstantiated and wrong. The third reviewer just dismissed a key aim of the proposal, an aim that both reviewer 1 & 2 liked the most. The grant application was triaged and the entire process was a horrible disaster! Who are those reviewers!!

NIH needs to make a strict rule for reviewers to read the comments made by reviewers on the initial submission; otherwise the review process is a waste of everyone’s time and resources and makes the entire review process a failure. In addition, NIH must not allow reviewers to exchange or post their comments and decisions during the review period. Why can’t grant review be similar to manuscript review in peer reviewed journals? We know that reviewers do not see comments or decisions from other reviewers during manuscript review. Applications that were scored and then not discussed can be explained by reviewers sharing comments during the review process and not reading the comments and scores from the earlier round.

Dear Mike Lauer:

Thank you for this very insightful publication. It is very informative for extramural investigators at all levels who may need some level of reassurance that NIH funding is not totally a stochastic process. The trend of A1 applications in the expected direction at least allows investigators to infer that the peer-review system in place is indeed rational, even if imperfect.

However, it does not cover a scenario that I suspect is not rare in the NIH grant writing experience of extramural investigators. Specifically, I am referring to the outcome of the set of grants submitted as a second round A0 (equivalent to prior A2 submissions) that have been through prior A0 and A1 submissions. Let us assume that the proposal was scored in both A0 and A1 submissions and, as stated in this review, scores improved between initial A0 and A1 submissions but didn’t meet the payline. I suspect that a high proportion of such investigators choose to resubmit a fresh A0 application incorporating comments on the A0 and A1 to the same study section where their proposal has been discussed in the past.

I will be most grateful if you will provide insights regarding the following questions:

1) How common is it for a proposal that trended positively as noted above to show up in a round two A0 proposal to the same study section and then score worse than the initial A0 proposal?

2) I have also heard of anecdotal reports of some of these submissions being unscored in a second round A0 submission. How likely is this to occur?

3) What does the fate of initially promising second round A0 – which will correspond to formerly A2, submissions say about reliability of the peer-review process at NIH?

I will be very grateful for any insights from the NIH and community of researchers.

Appreciatively,

An Assistant Professor in a US university.

The most useful result of this analysis is the observation that a rewritten de novo application typically only improves in score by 10~15 percentage points. That suggests that unless the original application scored pretty well, probably in the 25-30 range, then it is unlikely that resubmitting will be successful.

Keep publishing all the charts and graphs – but that doesn’t help with the concrete issues that are being raised: the associate prof black hole and the fact that extra scrutiny should be afforded to scored A0s that are subsequently not discussed – it’s probably somebody’s job to look at these cases and make sure it’s not due to the problems mentioned above. At least the charts make the point that it wouldn’t be too hard of a job to do this, since it’s the minority of grant applications!

I think the results of the analysis were presented in an unbalanced, and somewhat defensive, manner. 25% of applications that were discussed and scored on the initial submission received lower scores on the resubmission. Rather than report that the majority improve (as one would expect they would), the real story is that 1 out of every 4 resubmissions does WORSE after responding to reviewer comments. It’s certainly possible to make a resubmission worse, but this is way too high.

As noted by a commenter above, this is very demoralizing, and makes the review process look capricious and irrational. In my experience, the points raised that result in a worse score on the resubmission may not even have been mentioned by reviewers of the initial submission, and so would never have been addressed. In my view, this is one of the most broken aspects of the NIH review process. We’ve all come to accept that we’re unlikely to get funded on the initial submission, but to have a 25% chance of actually doing worse on the resubmission is not acceptable. It’s probably not possible, but it would be instructive to see an analysis that compared outcomes when the same vs. different reviewers handle the initial submission and resubmission. That seems to be the working hypothesis of many of us on this thread.

I think a lot of the poor outcomes of grant reviews are a matter of incompetent members of the IRG. Even if they make poor comments, there is no punishment for it. I think it is very important to have an evaluation matrix for the reviewers. It should be an open process: reviewers know whose application it is. The comments by the reviewers and their names should be published in a database accessible to the scientific community.