
As described on our grants website, the R21 activity code “is intended to encourage exploratory/developmental research by providing support for the early and conceptual stages of project development.” NIH seeks applications for “exploratory, novel studies that break new ground,” for “high-risk, high-reward studies,” and for projects that are distinct from those that would be funded by the traditional R01. R21 grants are short in duration (a project period of up to 2 years) and lower in budget than most R01s (the combined budget over two years cannot exceed $275,000 in direct costs). NIH institutes and centers (ICs) approach the R21 mechanism in different ways: 18 ICs accept investigator-initiated R21 applications in response to the parent R21 funding opportunity, while 7 ICs accept R21 applications only in response to specific funding opportunity announcements. As mentioned in a 2015 Rock Talk blog post, we at NIH are interested in how R01 trends compare with those of other research project grants, so today I’d like to continue and expand that look at R01 and R21 trends across NIH’s extramural research program.

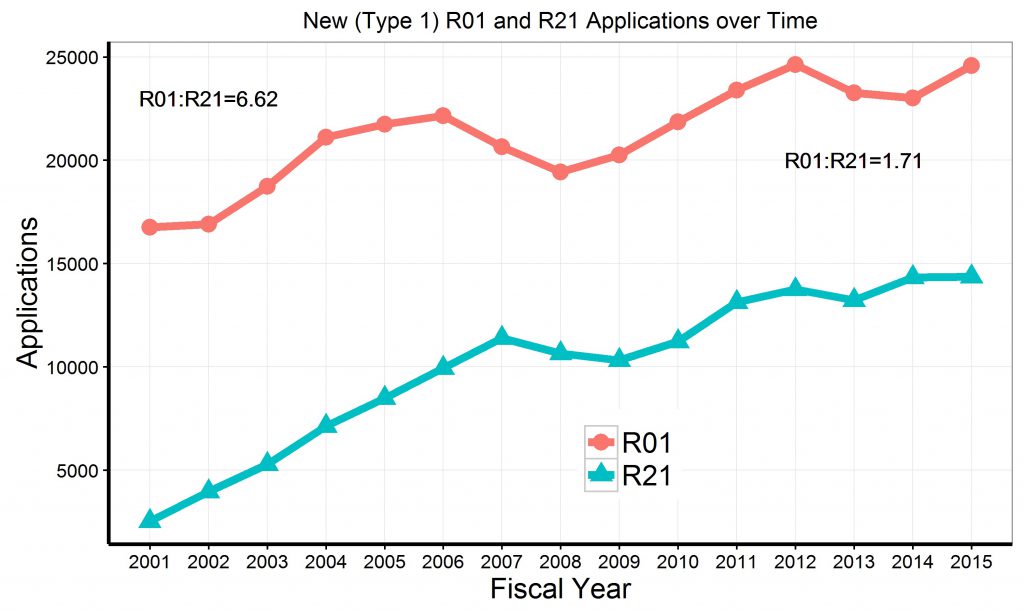

Figure 1 shows trends in R21 and R01 de novo (“Type 1”) applications since 2001. The number of applications has increased substantially for both activity codes, but the rate of growth has been much greater for R21s. In 2001, NIH received six R01 applications for every R21 application; in 2015, the ratio of R01 applications to R21 applications was less than 2. Using the new investigator policy definition to identify new investigators, we see that, on average across the last five fiscal years, approximately 35% of R01 applications and 50% of R21 applications are submitted by new investigators. For awards, 35% of R01 awards and 34% of R21 awards are made to new investigators.

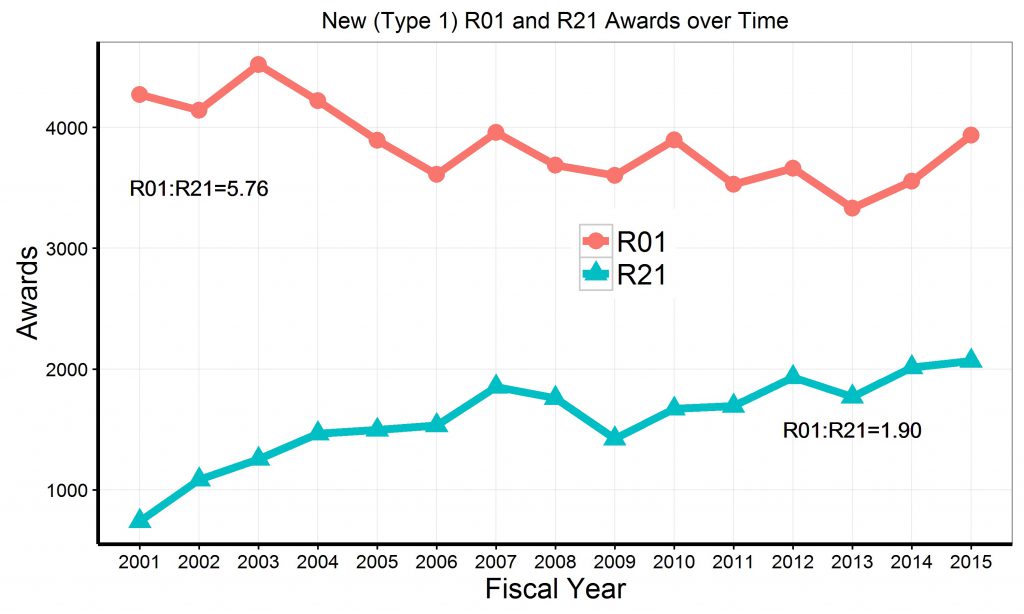

Figure 2 shows trends in de novo (“Type 1”) R21 and R01 awards since 2001. Over time, the number of R01 awards has gradually declined, while the number of R21 awards has substantially increased.

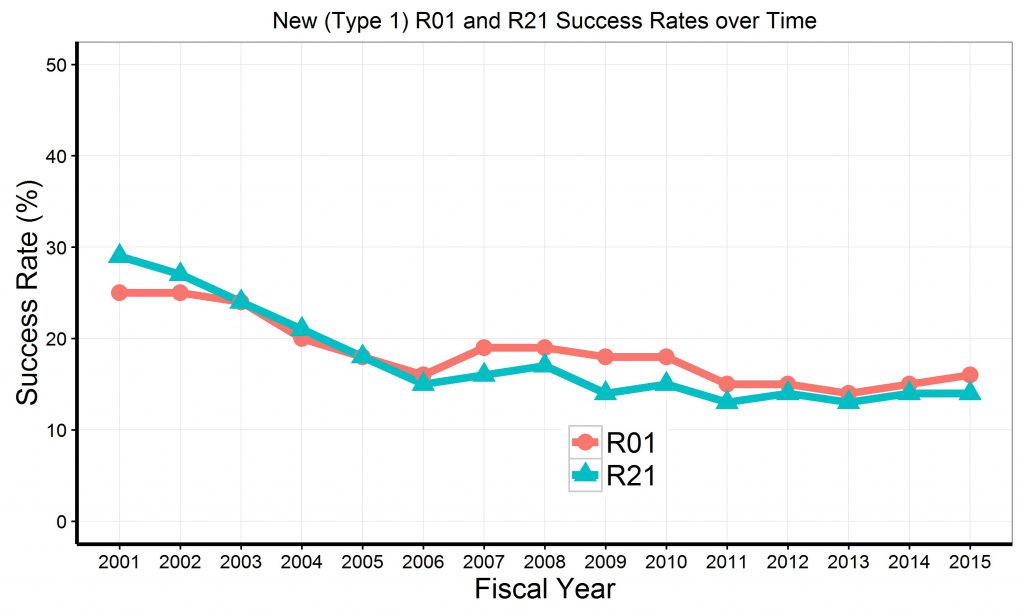

Figure 3 shows trends in success rates for de novo (“Type 1”) R21 and R01 applications. While in 2001 the success rate for R21 applications was somewhat higher, over time R21 application success rates have been equal to or lower than R01 application success rates. In FY2015, the success rate for Type 1 R21 applications was only 14%, compared to 16% for Type 1 R01 applications.

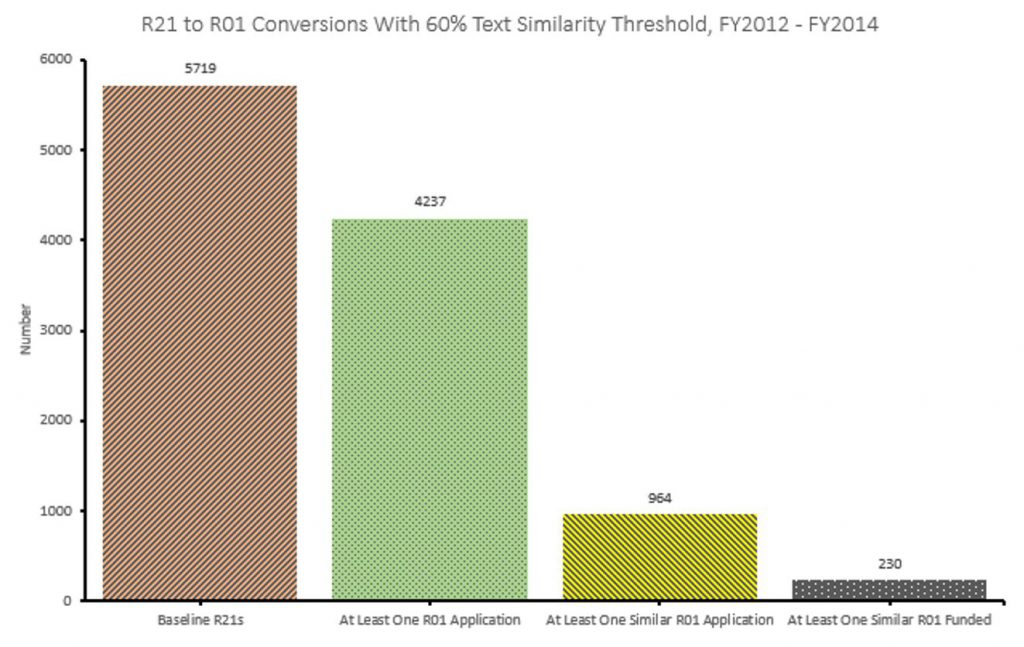

Since R21 projects are intended to be exploratory, we used text-mining software to see how often R21 awardees subsequently submitted related R01 applications. We used a text-matching algorithm similar to the one we used for our previous analyses of “virtual A2 applications.” For R21 awards that were active in fiscal years 2012-2014, we looked for subsequent R01 applications that were at least 60% textually similar to the R21.
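The post does not describe the matching algorithm itself, so the following is only a minimal sketch of the general idea of a 60% textual-similarity cutoff. It assumes cosine similarity over simple word counts, which is a stand-in chosen for illustration and not NIH’s actual method; the function names and the example texts are hypothetical.

```python
import math
import re
from collections import Counter

# The 60% cutoff comes from the post; the cosine-over-word-counts metric is an assumption.
SIMILARITY_THRESHOLD = 0.60

def word_counts(text: str) -> Counter:
    """Lowercase the text and count its alphabetic word tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity (0.0 to 1.0) between the word-count vectors of two texts."""
    ca, cb = word_counts(a), word_counts(b)
    dot = sum(ca[w] * cb[w] for w in ca.keys() & cb.keys())
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0

def similar_r01s(r21_text: str, r01_texts: dict) -> list:
    """Return IDs of later R01 applications whose text meets the similarity threshold."""
    return [app_id for app_id, text in r01_texts.items()
            if cosine_similarity(r21_text, text) >= SIMILARITY_THRESHOLD]

# Hypothetical example texts, not real applications.
print(similar_r01s("kidney fibrosis screen",
                   {"A": "kidney fibrosis screen mechanism", "B": "cardiac imaging"}))
```

Real abstracts are far longer, and a different metric, tokenization, or weighting scheme (for example, TF-IDF) would shift which pairs cross the 60% line, so the threshold only has meaning relative to the specific algorithm used.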

Figure 4 shows our findings. Of 5,719 unique R21 awards, 4,237 (74%) were followed by at least one application from the same PI, but only 964 (17%) were followed by at least one similar R01 application. Finally, only 230 of the 5,719 R21 awards (4%) were followed by at least one similar funded R01 project.
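The percentages in this funnel follow directly from the counts given above. The short sketch below recomputes them and adds one derived ratio: among the R21 awards that were followed by a similar R01 application, the share that were also followed by a similar funded R01. That ratio is counted per R21 award, not per R01 application, so it is not an R01 success rate.

```python
def share(count: int, total: int) -> str:
    """Format count/total as a whole-number percentage."""
    return f"{100 * count / total:.0f}%"

TOTAL_R21_AWARDS = 5_719  # unique R21 awards active in FY2012-2014 (from the post)
funnel = {
    "followed by any application from the same PI": 4_237,
    "followed by at least one similar R01 application": 964,
    "followed by at least one similar funded R01": 230,
}

for label, count in funnel.items():
    print(f"{label}: {count} of {TOTAL_R21_AWARDS} ({share(count, TOTAL_R21_AWARDS)})")

# Derived, award-level ratio (not an application success rate):
print("funded among those with a similar R01 application:", share(230, 964))
```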

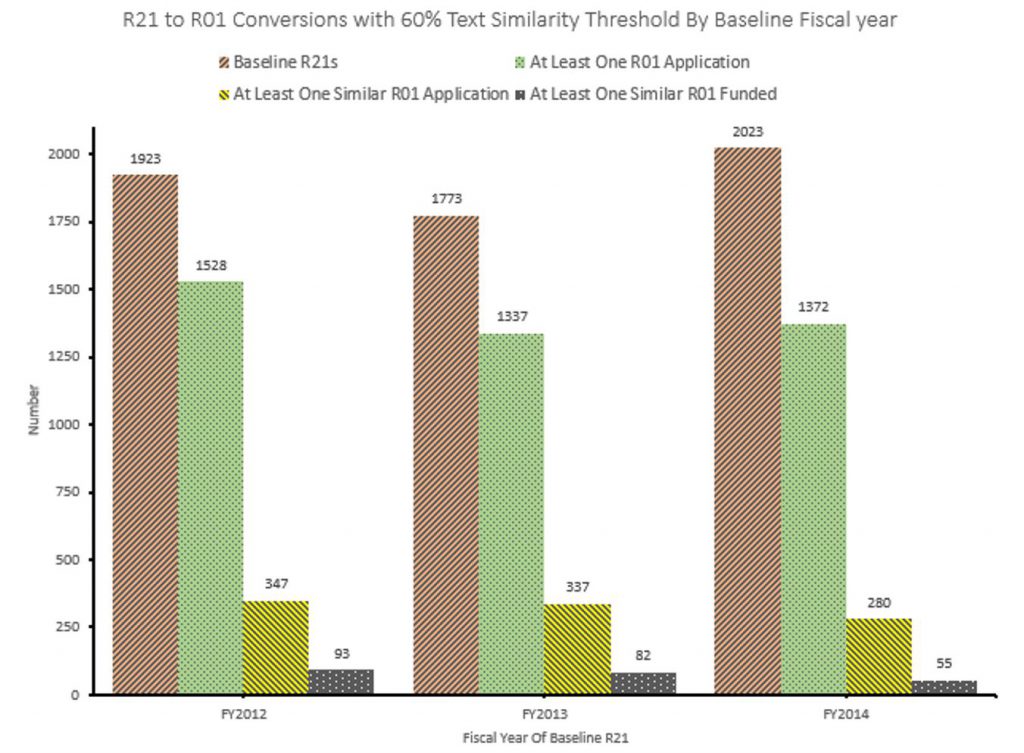

Figure 5 shows the same data broken down by the fiscal year in which the baseline R21 was funded.

As a quality check, we pulled a 15% sample of R21-R01 application pairs for which our text-mining software indicated at least 60% similarity. Our subject matter experts found a plausible relationship between the two projects in over 80% of cases.
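The post does not say how the 15% sample was drawn. Assuming a simple random sample without replacement, the check could be sketched as below. The function names, the fixed seed, and the 200-pair list are hypothetical and used only for illustration; the post does not report how many pairs were flagged.

```python
import random

def draw_review_sample(pairs, fraction=0.15, seed=0):
    """Return a reproducible simple random sample (without replacement) of pairs."""
    k = round(len(pairs) * fraction)
    rng = random.Random(seed)  # fixed seed so the same sample can be redrawn later
    return rng.sample(pairs, k)

def plausible_share(expert_labels):
    """Fraction of sampled pairs that reviewers judged plausibly related (True)."""
    return sum(expert_labels) / len(expert_labels)

# Hypothetical list of flagged (R21, R01) pairs.
pairs = [(f"R21-{i}", f"R01-{i}") for i in range(200)]
sample = draw_review_sample(pairs)
print(len(sample))
```

A check of this kind measures only how often flagged pairs are genuinely related. It says nothing about related R01s that the matcher failed to flag.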

Putting these findings together, we see that:

- The R21 mechanism is increasingly popular – we are seeing many more applications and awards, with growth rates exceeding those of R01 grants – but also highly competitive.

- Most R21 awardees have previously received some NIH funding. Although about half of R21 applications come from new investigators, only 34% of R21 awardees were new NIH investigators.

- Over 15% of R21 awards are followed by at least one similar R01 application, but fewer than 5% of R21 awards are followed by at least one similar funded R01 project.

I am grateful to my colleagues in the OER Statistical Analysis and Reporting Branch (SARB), in the OER Research Condition and Disease Category (RCDC) Branch, and in the OER Office of Extramural Programs (OEP) for assistance with these analyses. I also thank Dr. Rebekah Rasooly, program director at the National Institute of Diabetes and Digestive and Kidney Diseases; Dr. Sherry Mills, chair of a trans-NIH committee examining the R21 at NIH; and the R21 committee members.

Interesting! Fewer than 5% of R21 awards are followed by at least one similar funded R01 project. Do you think this is because the subsequent similar R01 is seen as lacking novelty?

I also find this an interesting statistic. Those who think an R21 is a stepping stone to an R01 likely need to rethink their grant application strategy.

Can you break it down by successful vs unsuccessful intervals of R21 support? Based on paper attributions? Wouldn’t be perfect but would start to address high risk / high (any?) reward ratio.

These are disturbing results in many ways. Many young investigators starting their careers are being advised by department chairs and others who have “decision-making authority” over their careers to start with an R21 application. In light of these findings, that would appear to be a career stopper rather than a career starter. It would be excellent if department chairs, administrators, senior advisors, and mentors of young investigators were made aware of the severely disproportionate progression from R21 to R01 funding.

A second comment: I think it would help us further understand the impact of the increase in R21 applications and funding over the past 15 years if we could see how reducing the number of funded R21 proposals (perhaps in 10% increments) would affect the number of funded R01 proposals, assuming that all dollars saved by funding fewer R21s went into funding R01s at the “average” R01 budget. There obviously won’t be a 1:1 trade-off, but I will hazard a guess that the impact on R01 funding would not be small: somewhere around one additional R01 funded for every 2.5 fewer R21s funded. Until now I was disappointed that some institutes did not support the parent R21 mechanism, but now I am glad they do not.

If I am reading this data correctly, after a funded R21 the key numbers are the R01 applications that were 60% similar to the funded R21s, specifically the similar applications that were submitted and those that were funded (the grey and ochre bars). If you look at the success rate of those (FY12 93/347, FY13 82/337, FY14 55/280), you end up with success rates of 26.8%, 24.3%, and 19.6%. This suggests that an R01 application similar to a prior funded R21 has a better success rate than an R01 that was not preceded by a funded R21.
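The commenter’s arithmetic can be reproduced from the funded/submitted counts they read off Figure 5. Note that these are plain funded-over-submitted ratios. NIH’s official success rate counts a resubmission received in the same fiscal year as its original only once, so these figures may not be strictly comparable with the 16% FY2015 Type 1 R01 rate quoted in the post.

```python
# (funded, submitted) similar R01 applications by fiscal year, as quoted in the comment.
similar_r01 = {2012: (93, 347), 2013: (82, 337), 2014: (55, 280)}

def success_rate(funded: int, submitted: int) -> float:
    """Simple funded/submitted ratio, expressed as a percentage."""
    return 100 * funded / submitted

for fy, (funded, submitted) in similar_r01.items():
    print(f"FY{fy % 100}: {funded}/{submitted} = {success_rate(funded, submitted):.1f}%")
```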

Of course only about 13-18% of these R21s resulted in an R01 application so it also shows that R21s don’t often translate into an R01 application at all. Perhaps because they were high risk and unsuccessful, or research direction focus changed, etc. Many valid reasons.

My personal experience mirrors the data. We received an R21 to perform a high-throughput screen for molecules that would have allowed a somewhat different approach to our target disease. In that evaluation, one reviewer commented that this was an ideal R21. We had a small number of hits and demonstrated that our screen was predictive of our lead molecule having a similar effect in vivo. We then submitted an R01 to examine the mechanism and the in vivo therapeutic/toxic effects of our lead compound in the animal model, which seemed to me to be exactly how R21s were supposed to work. The new review panel, however, did not seem to care that we had done exactly what we proposed to do in the R21. This experience bears out that NIH administrative policy has very little effect when grant reviewers are free to express their own biases.

I think the issue is the 60% text similarity. We have no idea whether that’s a good measure for picking up R01 grants derived from R21 applications. The OP indicates that most of the related grants this approach picks up are reasonable, but one can’t know how many R01 grants it misses.

What I think one can say is that for the R21 to R01 conversions this approach does capture, about 1/4 of those R01 grants are successful. Not sure how that compares to R01 success rates on the whole. One would need to know that number to understand whether these R21 -> R01 grants are doing better than average.

From my own perspective, I see two trends that both place a higher demand on R21 applications (or similar funding) and also make the success of these applications decreasingly likely:

1. Less funding is available for starting new projects. Prior sources such as institutional funds, state government funds, or foundation grants have become more competitive and more restricted to specific research areas. NIH-funded centers and program projects also used to be a reliable source of funds to initiate new projects. As these sources have disappeared, individual investigators are increasingly forced to turn to the R21 mechanism.

2. Study sections have become increasingly critical of R21 grants. While the R21 mechanism was originally designed to support innovative or transformational ideas with little or no preliminary data, in reality most study sections require a large amount of preliminary data, in addition to established and productive collaborations and prior publications in the new research area. While the NIH seems to want to provide a funding mechanism for initiating exciting new projects, the members of study sections, all of whom have one or more R01 grants, see the R21 mechanism as a waste of time and money, and the general attitude is that none of these proposals is worth funding.

The solution I suggest is to move all R21 review to special study sections that do not review R01 or P01 grants. In this way, comparison and scoring would take place among grants of similar scope. Another solution, though perhaps more difficult to enforce, would be to bar R21 applications from presenting preliminary data, so that they would be judged solely on innovation and transformative potential.

I would check your data-mining software method for 60% R21 similarity/identity against a subset where you actually ask the PI the question: “Is the temporally subsequent R01 substantially and directly related to the R21 work?” Just in case.

That said, R21s are essential portals to new innovations, given the conservative stance of R01 review committees, yet they are unfortunately viewed skeptically by some institutes and programs, such as NCI.

Overall, funding rates on both R21s and R01s are simply too low to support the solid national biomedical research infrastructure stated by NIH as a goal. Advocacy for the NIH mission takes on new urgency, given the incoming administration’s stated goals.

R21s don’t require preliminary data? That’s a joke. And the people who should be running the study sections to eliminate this requirement are not doing their job. Barring the R21s from having any preliminary data or publications relative to the proposal whatsoever is a great idea.

This is all interesting, but I would like to see other types of grants included in this analysis. I am frequently told here that R01s are a thing of the past, and that NIH is moving more and more toward large focused grants and RFAs. This is being sold locally as dictating the kinds of scientists and programs that we should be focused on recruiting and building. Thus, it is not just R21 versus R01, but R grants competing with others. I would be interested both in seeing the numbers as well as any thought behind such trends.

Multiple replies have raised the significant issue that study sections require large amounts of preliminary data for an R21, and some have proposed intriguing solutions. Why is there no response from Mike Lauer or another NIH official indicating either that they are aware of the issue and working on it, or that they don’t care about it and we can just forget about it? What is the point of this comment forum if we are just airing our views to each other?

We do appreciate and read the thoughtful feedback in the comments, which help us as we engage in our internal policy discussions. The data shared in this post is part of ongoing analyses to look at the R21 and R01 in the context of all activity codes used to support extramural research. We are aware of the concerns regarding preliminary data and yes, we are actively discussing how to address these issues.

Hi,

I believe sufficient time has passed since NIH expressed this concern and commitment. My R21 was recently reviewed, and I do not see any change in practice between 2017 and 2023. Reviewers still ask for more data and for multiple proposed experiments. Scores still vary widely among reviewers (for example, 1 versus 6). I believe NIH should, like NSF, exert more control over the review process.

It is because reviewers view the follow-up R01 as not novel! I was at a study section where a reviewer said, “the PI had an R03 on the same idea, so this is not new…” NIH needs to educate its reviewers on the different mechanisms. The SRO should step in when an application discussion heads in the “not novel” direction.

The most important takeaway from the opinions above seems to me to be this: please let researchers with exciting new ideas, as proven by successfully funded AND executed R21s or similar grants, do their job and take those ideas to the next level with an R01, so that biomedical and other real-life advances may result down the road.