23 Comments

A few months ago, a researcher told me about his experiences with the relatively new NIH policy by which investigators are allowed to submit what we have come to call “virtual A2s.”

Under NIH’s previous single resubmission policy, if an investigator’s de novo R01 grant application (called an “A0”) was not funded, they had one chance to submit a revision (called an “A1”). If the A1 application was unsuccessful, the applicant was required to make significant changes to the application relative to the previous submissions. NIH took measures to turn away subsequent submissions that were materially similar to the unfunded A1. Under NIH’s current policy, investigators may resubmit a materially similar application as a new submission after the A1 submission. We will call these applications “virtual A2s.” The researcher told me that his virtual A2 did not fare well; although his A0 and A1 had received good scores (though not good enough for funding), the virtual A2 was not discussed. He wondered: just how likely is it for a virtual A2 to be successful?

Because we treat virtual A2s as de novo submissions, we do not link them to previous versions; therefore, this was not a simple question to answer. However, we have taken advantage of text-mining software to identify virtual A2s and to describe their review outcomes compared to other submissions from the same investigators.

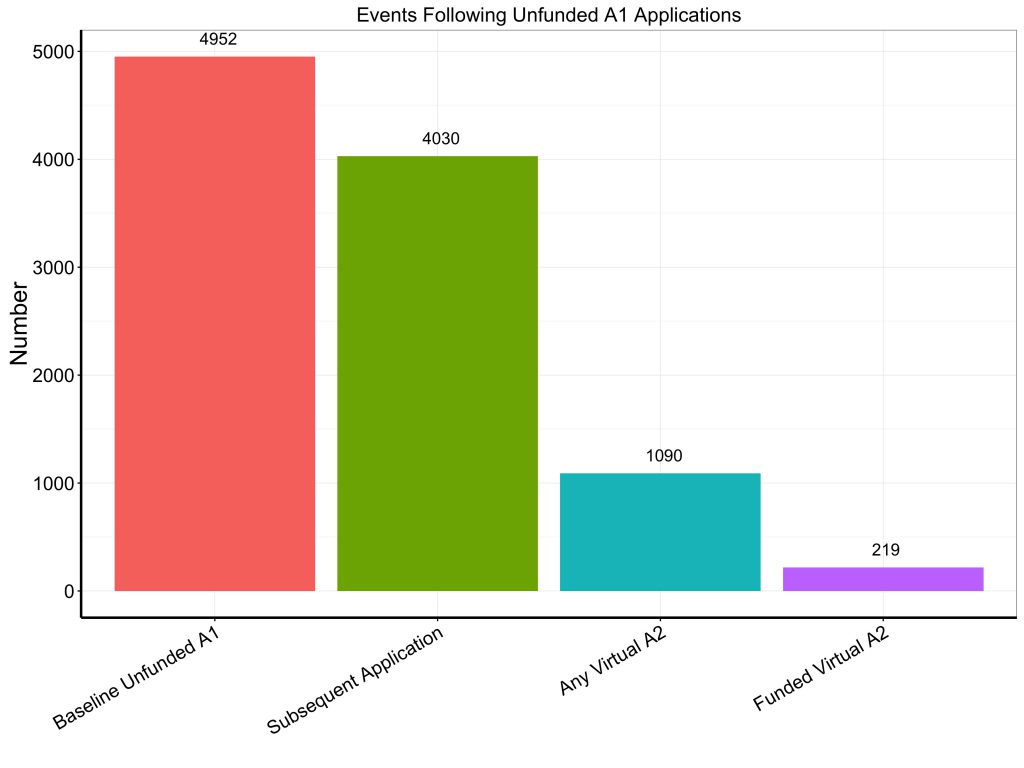

We began with 4,952 unique R01 A1 applications that were considered for funding in fiscal year (FY) 2014 and were not funded. These 4,952 applications had been submitted by 5,660 unique applicants (about 18% of the applications named multiple principal investigators, or PIs). We then looked through the middle of FY 2016 and found that among these 4,952, there were 4,030 cases in which at least one subsequent R01 or R21 application was submitted by the same PIs. We used text-mining software to determine the word and concept frequency of the titles, abstracts and specific aims of the subsequent applications, and found that among the 4,952 unfunded applications, there were 1,090 cases in which at least one later application was more than 80% similar to the unfunded A1: these cases are counted as “any virtual A2s.”
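The post does not name the text-mining software or the similarity measure behind the 80% cutoff. As a rough illustration only, the sketch below assumes a plain bag-of-words cosine similarity over word counts; it does not model the “concept frequency” the post mentions, and the helper names `cosine_similarity` and `is_virtual_a2`, as well as the sample aims, are hypothetical.

```python
import math
import re
from collections import Counter


def word_counts(text: str) -> Counter:
    """Lowercase the text and count alphabetic word tokens (digits are ignored)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between the word-frequency vectors of two texts."""
    ca, cb = word_counts(a), word_counts(b)
    dot = sum(ca[w] * cb[w] for w in ca.keys() & cb.keys())
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(
        sum(v * v for v in cb.values())
    )
    return dot / norm if norm else 0.0


# Assumed cutoff mirroring the post's "more than 80% similar".
SIMILARITY_THRESHOLD = 0.8


def is_virtual_a2(unfunded_a1: str, later_application: str) -> bool:
    """Hypothetical helper: flag a later application as a candidate virtual A2."""
    return cosine_similarity(unfunded_a1, later_application) > SIMILARITY_THRESHOLD


# Hypothetical specific aims, for illustration only.
a1 = "Aim 1: define the role of gene X in cardiac fibrosis. Aim 2: test inhibitors of gene X in mice."
later = "Aim 1: define the role of gene X in cardiac fibrosis. Aim 2: test new inhibitors of gene X in mice."
unrelated = "Aim 1: map neural circuits controlling feeding behavior in zebrafish."

print(is_virtual_a2(a1, later), is_virtual_a2(a1, unrelated))
```

A real pipeline would likely weight terms (for example with TF-IDF) and compare titles, abstracts and aims separately; raw counts are used here only to keep the idea visible.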

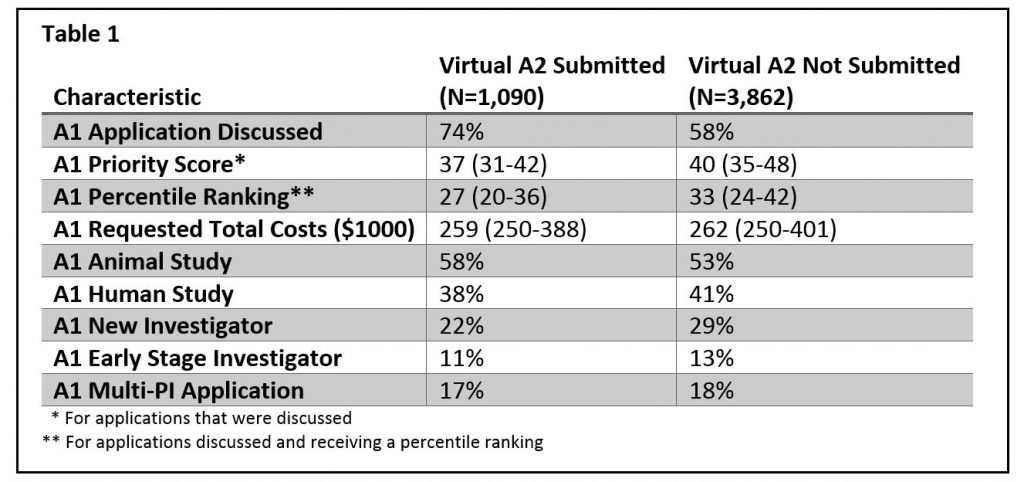

Table 1 shows the characteristics of the baseline unfunded A1 applications according to whether they were followed by at least one virtual A2 application. As might be expected, PIs were more likely to submit a virtual A2 if the unfunded A1 had been discussed during peer review, and if the scores (if discussed) were better. New investigators were also slightly less likely to submit virtual A2s.

The figure below shows the events following unfunded A1 applications. Of the 1,090 cases in which at least one virtual A2 application was submitted, 219 – or 20% – had at least one application funded.

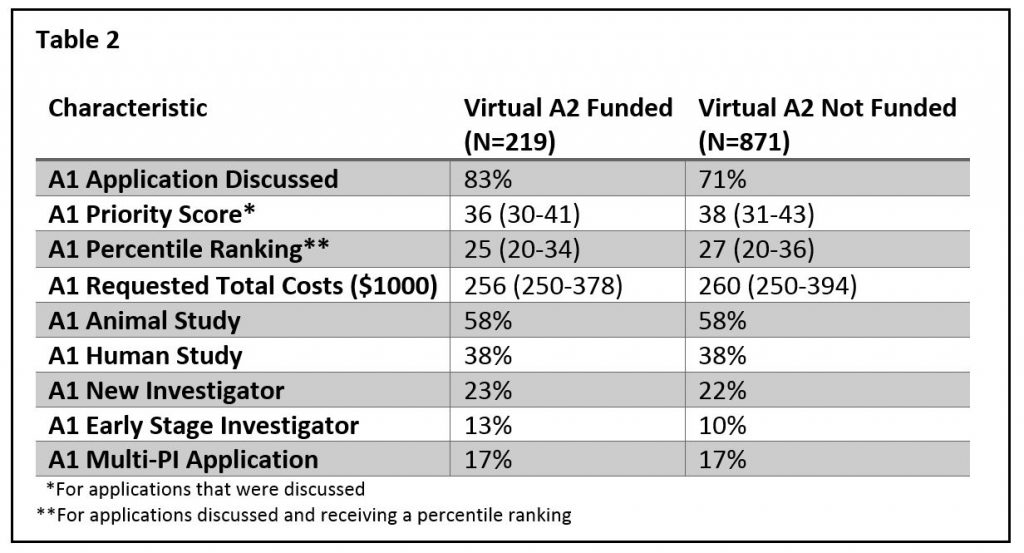

Table 2 shows the characteristics of the baseline unfunded A1 applications according to whether at least one corresponding virtual A2 application was funded. As might be expected, over 80% of the unfunded A1s that resulted in a successful virtual A2 were discussed in peer review groups. Otherwise there were no major differences.

In summary, we found that:

- Investigators are taking advantage of the new policy. For about 22% of unsuccessful A1 applications, we see a materially similar subsequent submission, what we’re calling a virtual A2.

- Investigators who fail to obtain funding on an A1 remain active. For about 80% of unsuccessful A1 applications (4,030 of 4,952), the same PIs submitted at least one subsequent R01 or R21 application by the middle of FY 2016.

- A small but real number of virtual A2 applications are successful: in 219 of the 1,090 cases (20%), at least one virtual A2 was funded.
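The headline percentages can be rechecked from the counts reported above. This short sketch (the variable names are ours) recomputes them and also derives one figure the post does not state directly: the share of all 4,952 unfunded A1s that went on to a funded virtual A2.

```python
# Counts reported in the post: FY 2014 unfunded R01 A1s, followed through mid-FY 2016.
unfunded_a1 = 4_952
with_later_application = 4_030
with_virtual_a2 = 1_090
virtual_a2_funded = 219


def pct(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


print(f"Any later R01/R21 application: {pct(with_later_application, unfunded_a1):.1f}%")  # 81.4%
print(f"Any virtual A2:                {pct(with_virtual_a2, unfunded_a1):.1f}%")         # 22.0%
print(f"Virtual A2 cases funded:       {pct(virtual_a2_funded, with_virtual_a2):.1f}%")   # 20.1%
# Derived, not stated in the post: funded virtual A2s as a share of all unfunded A1s.
print(f"Funded virtual A2, all A1s:    {pct(virtual_a2_funded, unfunded_a1):.1f}%")       # 4.4%
```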

To return to the question the researcher asked me a few months ago: his story is not unusual. The percentage of virtual A2 applications that are funded is similar to that of de novo applications. There does not appear to be any special advantage from previous submission and review. However, there does not appear to be any disadvantage either; the policy appears to be giving a small but real number of second-time revisions their chance.

I am most grateful to Andrei Manoli, Judy Riggie, and Rick Ikeda for their invaluable work on these analyses.

What percentage of all grants funded are A0, A1, virtual A2, virtual A3?

What does this say about review? I would suggest that it indicates what Drugmonkey often discusses: there is an informal queue system. A0s are treated more critically than A1s. Proposals that score well but land just under the line are reviewed essentially worse as virtual A2s. It is likely that if real A2s still existed, these proposals would have scored better. Another interesting analysis would be of virtual A2s that were undiscussed A1s. These would be liberated from the queue as well, and perhaps could also show a greater jump in scoring than, say, an undiscussed A0 that was resubmitted as an A1.

When I sat on a review panel, this wasn’t the case for most reviewers. Maybe some function that way, but most looked at the grant fresh. However, having comments from the previous round of reviews can help an applicant prepare a clearer grant, and maybe that accounts for the effects.

My experience was essentially the same as the example used. The A1 scored at the 12th percentile but was not funded; NICHD’s payline was the 9th percentile. I submitted a new application incorporating the second set of critiques, which went to the same study section as the A0 and A1, and it scored at the 50th percentile. The system is broken, period!

Sorry to hear that. This big disparity suggests that efforts should be made to make reviews approximately reproducible. This is a hard problem for sure, but definitely worth it. Data reproducibility is necessary for the progress of science, and I would argue the same for review consistency. I appreciate very well that review is not an exact science, but it should not lead to going from the 12th percentile to the 50th, or, as a later comment stated for another grant, to covering a broad range of possibilities (15th percentile to triage). Maybe someone from NIH can comment on what specifically is being done to remedy this.

The problem with the previous policy is that there were very good proposals that could not be resubmitted, even when they missed funding with a score at the 15th percentile.

The problem with the current policy is that bad proposals can be resubmitted “ad nauseam,” increasing the burden on reviewers. Moreover, knowing that you will never “totally kill” a grant, reviewers may now give an OK score, thinking that “she or he can submit again.”

I think the best approach would be to allow a third submission when you receive a decent score. I don’t think that being able to resubmit after being triaged twice (or triaged and scored above the 30th percentile) does anyone (even the applicant) any good.

My thanks to those who undertook this analysis since it provided me with the very useful information that a research project is not necessarily dead once the A1 is rejected. My question would be “why”?

Did the success of the virtual A2s depend on the alterations made in response to the A1 review? (Evaluating this would need a substantial, and perhaps questionable, comparison of the reviews with the A1s and with the comments.) Or are the virtual A2 applications successful because they became disconnected from their prior reviews and reviewers, and can now be seen more favorably in an independent light, unbiased by the initial reviews? (This would need to be compared with the presumably lower (?) historic success rate of A2s, adjusted for variations in the funding line, before A2s were discontinued.) Both answers are interesting in terms of helping researchers decide how to respond to a rejected A1.

As reviewers, we are not supposed to refer back to, or even mention, the history of an “A2.” It is more a function of the latter point made by Fred; that is, fresh eyes see the application in a better light AND (likely) the crop of applications is, overall, less competitive. This point is overlooked: sometimes we see a very good crop of applications, making it very difficult for virtual A2s to break in; other times, the crop of applications is not so great. So it is more than just a function of reviewer bias; it has as much to do with the overall quality of the applications against which the A2 is competing.

One thing I notice in Table 1 is a pretty big difference in whether a virtual A2 was submitted, depending on whether the PI had NI or ESI status.

This might suggest that NI/ESI are more willing to throw everything out and start again from scratch (i.e. go “real” A0 again), rather than re-hash the aims as a virtual A2? It’s pretty well established that flexibility declines with age.

Or it might suggest that NIs had their chance and are no longer in the system: they are less able to keep submitting applications than those with more established careers (tenure?), who can continue submitting the same or a similar grant until it sticks?

Either way, very interesting study, and thanks for sharing!

NIH has identified virtual A2s and put them into a category that will not be treated fairly.

For example, my A1 scored pretty well. I then improved it based on the reviewers’ comments and submitted it as another R01; surprisingly, it was not discussed. Unbelievable!!

That is why I think NIH will put virtual A2s into a separate group and give them less consideration.

Do you think NIH will allow you to submit similar grants many times? That is not true. They want to reduce the effort by eliminating the virtual A2s.

Wasn’t the initial rationale for allowing unlimited R01 resubmissions that the A0/A1 limit was disproportionately hurting New Investigators? It seems New/Early Stage Investigators aren’t taking advantage of this (but are doing about the same on the virtual A2s they submitted, if I’m reading Table 2 right).

It’s worth considering the other possibility, that this results from random chance in the review process: the percentage funded out of a pool of good applications always stays the same.

I think you hit the nail on the head! There is a random chance at each round that the grant will be funded. For an A1 there should be a small improvement (not for every grant, but on average), and a large negative deviation should give concern. For the A2, it is totally random again.
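The random-chance hypothesis raised in these two comments can be made concrete with a toy simulation. Assuming, purely for illustration, that every submission is an independent draw with the same hypothetical funding probability (0.2 here, picked only because it is close to the 20% reported in the post), the funding rate among still-unfunded applications comes out the same at every round, which would look like “no special advantage, and no disadvantage” for virtual A2s. This is not NIH’s model, and it ignores the small average A1 improvement the second comment expects; `simulate_rounds` is a hypothetical helper.

```python
import random


def simulate_rounds(
    n_applications: int, p_fund: float, rounds: int = 3, seed: int = 0
) -> list[float]:
    """Return the funding rate at each round among applications still unfunded.

    Hypothetical model: every submission is an independent draw with the
    same probability p_fund, regardless of its earlier review history.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    rates = []
    remaining = n_applications
    for _ in range(rounds):
        funded = sum(1 for _ in range(remaining) if rng.random() < p_fund)
        rates.append(funded / remaining if remaining else 0.0)
        remaining -= funded  # only unfunded applications go to the next round
    return rates


rates = simulate_rounds(200_000, p_fund=0.2)
for label, rate in zip(["A0", "A1", "virtual A2"], rates):
    print(f"{label}: {rate:.3f}")
```

Under this memoryless assumption, earlier failures carry no information about the next round; a model with real reviewer feedback would instead need round-specific probabilities.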

It is interesting how short NIH’s memory seems to be. I can remember when A2s were real, and study sections would sometimes see grants as “virtual A3s.” Anyway, I am so glad that NIH reversed the draconian policy of not allowing the virtual A2. That 20% of these are funded as new A0 submissions does show the vagaries of peer review (as in the original situation, where the virtual A2 was triaged after decent scores as an A0 and A1), but that triage may have happened because the reviewers recognized the grant from the prior submissions and wanted to send a strong message.

However, since about 20% of the scientists with an unfunded A1 did not submit again within a year (roughly 1,000 PIs!), it would be very important to find out who these people tend to be and why they did not resubmit. Did they lose their jobs? Leave academic science? Become so discouraged that they gave up? Get funding from another source (e.g., NSF or DOD)? How many of them were from underrepresented groups and are now lost from the biomedical workforce (NIH’s own numbers say that underrepresented scientists are less likely to be successful in their bids for NIH grants)?

I may become one of those 20%. I had R01 funding from 2006 until 2010 and a no-cost extension (NCE) until the end of 2012, followed by an unsuccessful competing renewal at A0 and A1 and the submission/resubmission of at least six other applications, alone or with a co-PI. My last A1 scored at the 21st percentile while I was trying to stay alive on small private foundation grants that have now ended. I lost my personnel and am now on somebody else’s grant as a co-investigator; it runs out next year and will probably not be renewed. I am in a research-track position, and I was told that if I don’t get funding, I am out. I perform all the duties of a tenure-track faculty member: I mentor students and postdocs, do committee work, serve as a regular NIH ad hoc reviewer, and write grant applications for other colleagues (including two T32 applications). My lab space has not yet been taken away, mainly because it was crappy to begin with, and as research track I am flying a bit under the radar of the local powers that be. Facing the thought of submitting a virtual A2 next cycle and getting triaged again leaves me exhausted, running on empty, and completely demoralized. I don’t have the money to perform more preliminary work, and my project has been lingering through six years of NIH non-renewal. Only one other person in the US works on the same subject, and an R21 with this person as co-investigator also got shot down. My latest critiques include remarks such as “well written,” but the criteria “incremental,” “limited significance,” and “modest productivity” are becoming very pervasive in the reviewer culture. I have no money and no personnel, but I still manage to publish a few papers per year. This is called modest productivity. Translational people tend to attribute limited significance to mechanistic studies. Not everybody needs to cut up rats and mice to present significant research. So, unless a miracle happens, I will be 59 and out of a job next year. Welcome to science. Thanks for letting me blow off steam!

As a reviewer, all I see is the negative of even more grants to review. As the workload increases, high-quality, experienced reviewers increasingly opt out of reviewing, and as inexperienced reviewers or reviewers without adequate knowledge hand out biased or unfair reviews, the number of “virtual A2s” continues to increase, resulting in further degradation of the quality and fairness of reviews. Our system is unsustainable.

A critical point: it is usually much less time-consuming to revise and submit a virtual A2 than to develop a brand-new A0. Thus, if “the percentage of virtual A2 applications that are funded is similar to that of de novo applications,” then it is more time-efficient to submit the virtual A2. As a PI who has had a virtual A2 funded, I can also say that program enthusiasm for the proposed project is a key element in eventual funding.

Over the past 8 years, I have been through the NIH funding yo-yo twice while getting a grant I have held for 18 years reviewed and renewed. I am now faced with submitting a virtual A2, but I may yet get my A1 funded???

My lab is very productive, and we have a track record of publishing in high-profile journals. Our work is also at the interface between basic science and medicine, and we are considered leaders in our field. Yet in each of the past instances of NIH review, our grant was given an average score as an A1 and then, as an A2, a score on the edge of the payline. In the first instance the grant was eventually funded, and the lab is now waiting to learn our fate on our current A1 attempt at renewal. We may get funded again or have to submit an A2, but the result of these experiences is that my group has shrunk from a sustainable size to a skeleton crew, and the folks in the lab are super stressed. Even if my current renewal gets funded, I’m not sure how to staff the lab. We are doing what I think is our best work, but my current staff is likely to leave for a less stressful work culture, so even if we get renewed via an A1 or A2, it seems like my lab is going to close in the next few years.

Thanks for this informative analysis! The question of whether the A0 and A1 reviewer responses end up being useful could be addressed by seeing whether there is a relationship between % text similarity and funding. One might imagine that a higher % text similarity between the A1 and the virtual A2 (i.e., less responsiveness to comments) would go with lower funding success. This would actually be comforting if true.

My experience: A0=36%, A1=15%, A2=triage, A3=33%. I have sampled almost the entire scoring range.

The results of this analysis offer further evidence that the system is broken. If reviewers are genuine in their criticism and applicants respond appropriately, then scored A1s resubmitted as virtual A2s should have improved success rates. What we have is a system with no reviewer fidelity (the ability of constructive reviews to result in changes in the application that improve success rates). Reviewer fidelity is best accomplished with the same reviewers seeing the re-submission. This often is NOT the case. How many R-series grants are scored as A0s and then not discussed as A1s?

I recently got the summary statement for my SC1 grant, and I got the surprise of my life: Reviewer #1 did not even know the eligibility criteria. I really suffered, and I know that my PO will not be helpful either, as no one wants to fix the system.

There was a time, “long ago,” when NIH grant reviewers were required to have NIH research grant support for their own research programs. It made sense, since full objectivity is difficult to achieve when reviewing a grant in one’s own research area (where the reviewer is the expert) if the reviewer cannot obtain any research funds for their own, complementary research!

Do plots of grant fund dollars available (corrected for inflation), research grant award rates, total number of reviewers and % reviewers with NIH research funding vs. time reveal any properties helpful in understanding the current R01 grant review and award issues being discussed here? (As an Emeritus Professor, no longer a PI on an NIH R01 grant, I am concerned about former Trainees and their contemporaries whose research is supported by current NIH R01 grant awards.)