
Cross-posted on the Review Matters blog from the Center for Scientific Review.

NIH has issued a request for information (RFI) seeking feedback on revising and simplifying the peer review framework for research project grant applications. The goal of this effort is to facilitate the mission of scientific peer review – identification of the strongest, highest-impact research. The proposed changes will allow peer reviewers to focus on scientific merit by evaluating 1) the scientific impact, research rigor, and feasibility of the proposed research without the distraction of administrative questions and 2) whether or not appropriate expertise and resources are available to conduct the research, thus mitigating the undue influence of the reputation of the institution or investigator.

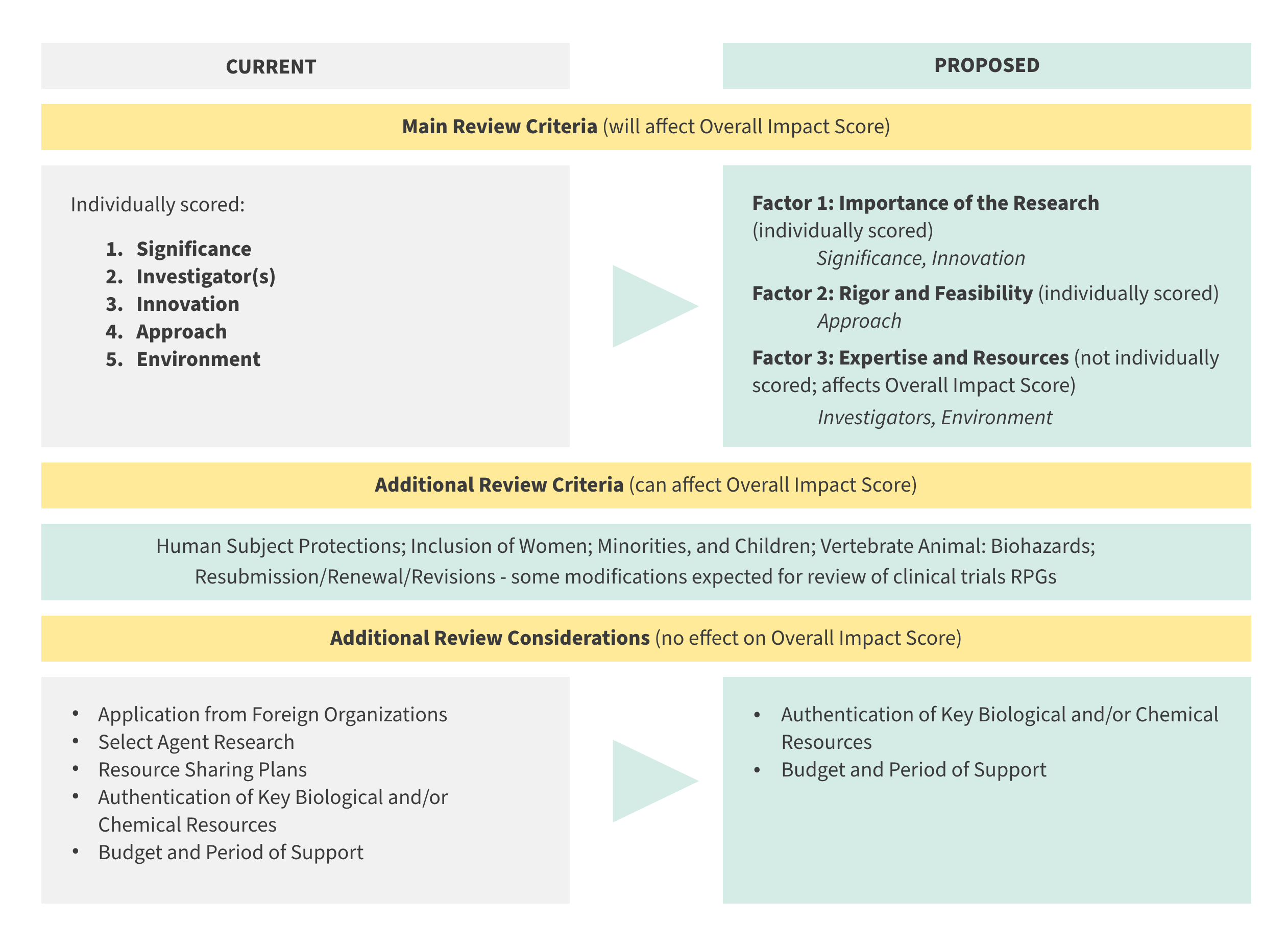

Currently, applications for research project grants (RPGs, such as R01s, R03s, R15s, R21s, R34s) are evaluated based on five scored criteria: Significance, Investigators, Innovation, Approach, and Environment (derived from NIH peer review regulations 42 C.F.R. Part 52h.8; see Definitions of Criteria and Considerations for Research Project Grant Critiques for more detail) and a number of additional review criteria such as Human Subject Protections.

NIH gathered input from the community to identify potential revisions to the review framework. Given longstanding and often-heard concerns from diverse groups, CSR decided to form two working groups to the CSR Advisory Council—one on non-clinical trials and one on clinical trials. To inform these groups, CSR published a Review Matters blog, which was cross-posted on the Office of Extramural Research blog, Open Mike. The blog received more than 9,000 views by unique individuals and over 400 comments. Interim recommendations were presented to the CSR Advisory Council in a public forum (March 2020 video, slides; March 2021 video, slides). Final recommendations from the CSRAC (report) were considered by the major extramural committees of the NIH that included leadership from across NIH institutes and centers. Additional background information can be found here. This process produced many modifications and the final proposal presented below. Discussions are underway to incorporate consideration of a Plan for Enhancing Diverse Perspectives (PEDP) and rigorous review of clinical trials RPGs (~10% of RPGs are clinical trials) within the proposed framework.

Simplified Review Criteria

NIH proposes to reorganize the five review criteria into three factors, with Factors 1 and 2 receiving a numerical score. Reviewers will be instructed to consider all three factors (Factors 1, 2 and 3) in arriving at their Overall Impact Score (scored 1-9), reflecting the overall scientific and technical merit of the application.

- Factor 1: Importance of the Research (Significance, Innovation), numerical score (1-9)

- Factor 2: Rigor and Feasibility (Approach), numerical score (1-9)

- Factor 3: Expertise and Resources (Investigator, Environment), assessed and considered in the Overall Impact Score, but not individually scored

Within Factor 3 (Expertise and Resources), Investigator and Environment will be assessed in the context of the research proposed. Investigator(s) will be rated as “fully capable” or “additional expertise/capability needed”. Environment will be rated as “appropriate” or “additional resources needed.” If a need for additional expertise or resources is identified, written justification must be provided. Detailed descriptions of the three factors can be found here.

The following “Additional Review Criteria” will remain largely unchanged: they will not be scored individually but will continue to affect the Overall Impact Score. A drop-down option of “Appropriate” or “Concerns” will be provided, with a justification narrative required for concerns.

- Human Subject Protections

- Inclusion of Women, Minorities, and Individuals Across the Lifespan

- Vertebrate Animal Protections

- Biohazards

- Resubmission

- Renewal – Evaluation of productivity during the previous project period will remain unchanged and will continue to affect the Overall Impact score

- Revisions

Other minor changes to the peer review framework are proposed that will reduce the time and effort required of reviewers. “Additional Review Considerations” are items that peer review is currently tasked with evaluating, but which are not scored and do not affect the Overall Impact score. NIH proposes to move select Additional Review Considerations out of the initial peer review process. Under the proposed framework, the remaining Additional Review Considerations are:

- Authentication of Key Biological and/or Chemical Resources:

- Drop-down option with a choice of “Appropriate” (no comments required) or “Concerns” (which must be briefly described).

- Budget and Period of Support:

- Rated as “Appropriate,” “Excessive,” or “Inadequate”; the latter two ratings require a brief account of concerns.

Through the RFI, NIH continues to seek further public input on the proposed changes before moving forward with implementation. The RFI will be open for a 90-day period, until March 10, 2023. We look forward to your comments.

You should carry this out ASAP. I’d happily explain more over the phone, but this is a FAR better approach to reviewing.

This feels right, as I think most reviewers are already reviewing with these criteria; they just have to phrase them differently and put them in different buckets. One challenge, which is probably difficult to tease out, is the impact that ‘feasibility’ may have on significance.

I agree that we should move to this framework for review as soon as possible. The emphasis on “should this be done” and “can this be done well” gets at the heart of what proposals should be conveying.

Reviewers should be focused on finding the proposals that will have a large impact on human health. We shouldn’t be so focused on the Nth technically flawless study of X gene or pathway, for example, when a riskier study on a groundbreaking topic could have greater impact.

I also think the recommendation that reviewers focus on writing the major score driving strengths/weaknesses will reduce ambiguity when interpreting summary statements.

One thing I do think should be re-emphasized is that “Innovation” should not be limited to “techniques” but should also include concepts, theories, or applications. Some reviewers focus too much on the latest technical trends without considering whether these add new value, are cost-effective, or merely retread already known information.

Completely agree!

This is a very common problem with bad reviewers. Because it takes a lot of time to know whether any concept is innovative or not, reviewers just search for technical innovation, such as “scRNA-seq”….

Personally, I find the emphasis on “innovation” has led to a rise in saltatory research, where investigators simply jump from one hot topic (or one hot technique) to the next instead of following up on their prior work. It might lead to more high-impact papers per grant, and in turn more grants per PI, but is that really our only goal? Moreover, it is far easier to do if one already has a large lab and lots of funding; for someone with a small lab, sinking a lot of resources into a brand-new area of research with no guarantee of success is often not possible. I am currently putting together an application for a second clinical trial of a drug I have developed, for a different but somewhat related indication. Since we already have one trial running, I suspect I will get comments about this not being innovative. Does that really make sense?

You would think that treating a disease would be the highest impact outcome there is …

Yes, I agree. The vague emphasis on innovation has mostly resulted in journal impact factor chasing (and the firehose of unreadable articles no one can keep up with).

All who are supporting these changes here should also take the time to post their comments to the RFI! I am a Professor of Biology at UNC-Chapel Hill, who has run an NIH-funded lab for 31 years. I have served as a member of several Study Sections and also served on the Advisory Councils of both NIGMS and CSR. I strongly support these changes. They will refocus review on the issues relevant to the scientific advance (Importance, Rigor, and Expertise) and reduce the influence of the perceived “prestige” of the applicant and their Institution.

The link above to the RFI takes you back to this page. Is there another comment site that you are referring to? Regarding review criteria, the budget should be given high priority in certain cases. For example, if the research plan calls for 200 mice to achieve adequate statistical power, but the budget is maxed out and includes funding for only 20 mice, then the project cannot succeed as planned and the proposal should be given a low overall score. The research sharing plan is something we can do without under current circumstances, where sharing is not enforced. Because these plans are typically not enforced, trying to get data from researchers is often fruitless, so having a section on it just wastes time for the writer and the reviewer.

Hi, you can submit comments to the RFI at the bottom of this page: https://rfi.grants.nih.gov/?s=638509b5409baa49f803e572 Thanks for your input!

The hyperlink in the last line (“We look forward to your comments.”) should link to the RFI:

https://rfi.grants.nih.gov/?s=638509b5409baa49f803e572

They might have edited it to correct the link

This is a positive move by NIH. I’m a current reviewer and often wish for both simplified criteria AND format for reviews. This seems to accomplish both. Please *require* reviewers to justify scores other than 1; too often reviewers will give a score of 2-5 without explanation or context, impairing an applicant’s ability to develop a successful revision.

The Innovation section generally needs more thought and perhaps more specificity; this criterion seems to get tangled up with both approach and significance. My sense is that reviewers often consider innovation to mean primarily innovative methods, and score negatively if these are not “new”, even if the application focuses on a bold new idea or explores new interventions or relationships. One way to address this would be to make this a standalone yes/no criterion based on evidence of innovation in the research question, conceptual framework, methods, or analytic approach.

I completely agree and would go even a step further by not allowing methodological innovation to count toward innovation.

Brilliant. Eliminates a lot of the Nobel prizes in biology and medicine.

Regarding the requirement to justify scores, these explanations are also critical for the rest of the reviewers, both to improve their reviewing skills and to establish that there really was a reasonable justification for the scores. This should help remove bias toward famous PIs or institutions.

I like the changes!

This reform is a welcome change that puts the focus of the review on the merits of the proposed research. The current review framework is an artificial construct that sometimes diverts reviews from what should be the main focus.

Subjectivity is an important part of humanity. However, its use in the review of grants and contracts must be tempered. The proposed changes appear to address this issue and should be welcomed by all.

My only concern with the proposed changes is that they do not go far enough. Saying that “Factor 3” will not be scored does not remove the potential for subjectivity to influence the key deciding factor for funding: the “Overall Impact Score”. Why not make submissions anonymous to the reviewers (no names, no biosketches), with CSR conducting the initial evaluation of the Investigators and the Environment when determining whether the application is appropriate for review? Expertise does not always depend on the time a researcher has spent in the field; the expertise of the applicant should be reflected in the quality of the application being reviewed. Finally, I agree with the other posted comments that “Innovation” is another problem, faced by both the applicant and the reviewer, that needs to be addressed. Combining it with “Significance” is a step in the right direction.

I agree with and support Alan Johnson’s suggestion to make submissions anonymous to the reviewers. Although an applicant could write the proposal in a way that makes their identity obvious to reviewers, anonymity would help minimize direct bias.

I agree that the proposed changes are appropriate, as the assessment of applications should focus on the scientific merits of the proposals, not the prestige of the investigators or the institutions. As a reviewer I already practice this when I decide on a final score, but it will be better if the rules are changed so that every reviewer focuses on the proposals.

I think this is overall a reasonable suggestion. The apparent equal weighting of the current criteria is out of balance. Naively, it might have put too much weight on the institution and probably too much weight on the investigator. In a more nuanced sense, however, the investigator’s qualifications should be taken into account, but not so much as to penalize young investigators. My only concern is that a specific area of expertise is probably less important for some investigators than a track record of moving into new fields and adding new perspectives. This should be encouraged. Thus the “qualities” of the investigator’s science, when known, should be taken into account. I think these proposed changes are apt to free reviewers to think more broadly about the science, but they should come with some instructions or examples to make clear what is meant.

I think you could go a step further and remove Factor 3. NIH has vetted the Institutions (Environment) that can apply for Federal Grants. In my opinion, this vetting is therefore a certification that these Institutions provide environments apt for biomedical research. In addition, the Institutions have vetted PIs as individuals able to carry out research in their name (grants are, unfortunately, assigned to the Institution and not the PI, which I think is a mistake). Therefore, potential concerns about the PI’s ability to carry out the specific project should be raised in Factor 2 (Rigor and Feasibility).

I also think you may want to consider allowing decimal scores (e.g., 1.1, 1.2, etc.) to better spread the scores.

While the proposed change addresses subjective bias to some extent, it is not an adequate safeguard to avoid it completely. A better alternative would be to have an anonymous scientific review (significance, innovation, and approach) with evaluation of investigator and environment done in a secondary review by NIH personnel. This will also allow ESI and new investigators to have equal opportunity.

I also suggest combining significance and innovation into one criterion, so that both highly clinically significant projects without much technical innovation and highly innovative projects with limited immediate clinical implications can be more successful.

I don’t think keeping the applicant anonymous is feasible. In Approach and Significance, applicants have to explain their previous work and support it with references, which will completely give away their identity.

The problem I see for SBIR reviews is the selection of review committee members without consideration of their expertise on the topic at hand.

This change will help to some degree the less established investigators and all investigators from less powerful centers. It can be argued, however, that the key issues of the review process currently are:

– Too narrow a range in the scoring system (1-9). In reality, the range is 2-5/6 for >95% of applications, with the majority in the 3-4 range. This leaves a lot to chance in who gets funded. The previous range (1.0-5.0), with 41 potential scores (e.g., 1.8, 1.9, 2.0, 2.1, etc.), was much more nuanced, permitting much better ranking of the applications. Introducing half points to the 1-9 scale (1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, etc.) would address this persistent, major problem in the current scoring.

– Variable quality of the reviews. A single reviewer (out of the 3 assigned to an application) can adversely affect the score, even when his/her criticisms have little merit.

Therefore, the quality of the reviewers, including their understanding of the translational significance of projects and their potential impact on clinical practice, is of paramount importance. NIH’s recent, very strong emphasis on bringing young (and hence often less experienced) investigators and other selected groups onto Study Sections as reviewers arguably leads to excessive underutilization of experienced investigators as reviewers. Please do not get me wrong: I am not suggesting that certain priorities should not exist, but one could argue that the proportion of “new” to “old” blood should assure the highest quality of reviews, and that the transition should be gradual.

1) Continue using Zoom or another web-based platform; this greatly reduces bias during the review process.

2) Zoom allows an unlimited number of reviewers to be included, which matters because in a fair system all grants should be discussed, not just 50%.

3) Every study section should have two or more chairs, a jury-based fair system.

4) An SRO should serve a given study section for only 1 or 2 years, no more; this will also greatly reduce bias.

These are crucial factors for a fairer and less biased system than the one currently in place.

Your takes are interesting, BUT:

1. CSR likes zoom meetings because online meetings save money for CSR. But reviewers are less engaged in discussions. Sometimes nearly half of the reviewers were black windows without any video feeds.

2. Unlimited numbers of reviewers and no triage of applications are never going to happen. Some applications are just awful; do you really want all the other panel members to pile on and add more flaws?

3. Chairs don’t really do much and there are always backup chairs at the meeting.

4. So you like anarchy in study section meetings?

Totally agree; all are important points.

I would also add that Study section members should also be changed every 2 years.

Agree that a Zoom/web-based platform can reduce bias: it simply widens the pool of potential reviewers by allowing people to participate in the review process even when they have personal responsibilities that make it very difficult to attend in person.

Agree that separating review of Factors 1 & 2 from 3 would further act to reduce bias.

Another change that would greatly simplify the re-review process would be to allow the applicant more space for the reply and to require a point-by-point response, similar to virtually all journal article re-reviews. As a grant applicant, I would find this a fairer process, and as a reviewer, it would make it much easier and quicker to see how the applicants addressed the prior concerns.

Clearly something needs to be done. Is this it?

What’s really new? I see the same 5 criteria bundled into 3 different bins.

In the end the system is only as good as the reviewers. IMHO that’s where all the truly important flaws are and that is where the focus should be.

I completely agree and strongly urge NIH to implement it starting in 2023.

I also suggest eliminating standing membership on the CSR Study Panel. This policy helps cultivate bias and small, exclusive interest groups.

Vertebrate Animal Protections: The number and severity of USDA and PHS animal welfare violations suggests that this section needs toughening-up.

Agree with Wasik, above.

None of this addresses the most serious problem in my view: reviewer accountability. Any single reviewer can trash a proposal, leaving it undiscussed, and then there is no check on comments that are careless, unfair, or just plain wrong. At the very least, an applicant should have an opportunity to fully respond to criticisms – even if it takes more than one page, even if it’s an A1. We can all learn to take criticism – both fair and unfair – and respond in a constructive way. When we get unfair criticism with no opportunity to respond, however, it’s just discouraging to the youngest, most creative, and most ambitious of our colleagues. I’ve seen a lot more discouragement than encouragement come out of study sections.

I agree with Singlestone’s comments on potential flaws due to reviewer accountability. The suggestion to allow for the option of more than a single page rebuttal from the applicant in order to adequately respond to reviewers’ criticisms is critical. In my experience, it can be impossible to accomplish this important task in one page.

A long overdue change to scoring criteria. Two thumbs up!! The criteria for selecting suitable reviewers should also be re-evaluated.

Something more to consider: even non-discussed applications deserve a proper explanation of the preliminary scores given. It is often the case that an application receives a 6 or 7 on one of the criteria without any text explaining what drives this preliminary score. One thing that would help eliminate this behavior is requiring a written explanation for every score given (you don’t need to write a dissertation for every score, but you should provide some justification for your scoring decision). If a review cannot be submitted without at least one sentence for each score given, many hard-to-justify or unfair scoring tendencies will likely disappear, since any “shocking” score will always need to be justified.

I agree with many of the earlier comments about the positive aspects of grouping Significance and Innovation into one Research Importance category. In my 30+ year history with NIH grants, I’ve seen a constant push by NIH to reward people doing riskier but higher-impact projects, with this push leading to changes in proposal format and scoring. Ultimately, though, all these new proposal formats and reviewer criteria meet the resistance of study sections that, faced with making difficult choices among many deserving projects, favor the “sure” thing over highly innovative but high-risk projects. I wholeheartedly support this push for innovation and believe it is necessary to counteract the natural conservatism of study sections in tough funding climates. At the same time, though, we need balance, and we also need to fund less innovative but highly significant research directions, just as a good investment policy spreads wealth across a portfolio of both low-risk, steady-income assets and high-risk, potentially high-growth assets. One unintended negative consequence of the previous format was that it gave a big boost to new grants exploiting a recent discovery (i.e., Innovation), as opposed to grants that tackled an important biological question or problem through steady, progressive long-term investigation. There was pressure to run with new directions even in competitive renewals rather than taking a systematic and steady approach to an important problem. I believe grouping Significance and Innovation together will help achieve a balanced portfolio of grants that includes both “safe” incremental progress on a highly significant and productive research direction and some grants that are risky but involve innovative new concepts and/or method development.

I fully agree with the previous comment. Placing more emphasis on the scientific value of the application itself is likely to diminish the role that unconscious biases play in the evaluation process. This is likely to result in the best science being the one selected for funding.

I am an NIH reviewer and I think this is a good move, esp. if this will reduce the focus on the applicant and his/her institution’s prestige.

A really good move would be a double-blind review. Reviewers do not need to know the name of the applicant to decide if the idea is significant and innovative or whether the approach is rigorous. Or maybe make it a 2 step process where reviewers will see the name of the applicant only after they have evaluated and ranked the scientific merit of the proposed project.

Love this idea. Assessing the science and preliminary work with the investigator and institution de-identified will help separate the science from the halo.

Overall, the proposed changes appear to be a minor reshuffling of the existing system that may not result in significant net positive change in the product of the review process. Most importantly, the changes don’t address the elephants in the room, which are the reliability and quality of reviews.

Review reliability could be improved by providing clear rubrics, examples of how to apply them, and better reviewer training. Please consider providing accompanying guidance (even better, rubrics) for how these new criteria should be applied to different types of applications. For example, how is Rigor defined for a Phase I feasibility study or for an SBIR Phase IIB project? Too often, applicants and reviewers are left to interpret fuzzy guidance, resulting in high variance between reviewers (poor inter-rater reliability).

Review quality starts and ends with the reviewers themselves. The SROs have a very difficult job recruiting and retaining quality reviewers, who usually spend after-hours, unpaid (or virtually unpaid) time performing their role. Most of us volunteer out of a desire to contribute to the research community, but end up burned out after a few cycles. Time is precious. The NIH might consider instituting some type of merit/recognition/rank/reward system for reviewers based on the quality and quantity of their reviews (as determined by SROs). Such recognition could be seen as a positive in academic progression, and thus motivate potential reviewers to volunteer and put in more effort than they might otherwise. Or, you could make a reviewer rank/score a mandatory field on the biosketch. If you really want motivated reviewers, you could associate their reviewer score in some small way with a higher level of consideration for their own grant applications. The entire community would benefit from a highly motivated and plentiful cadre of highly qualified reviewers!

A long overdue change. Eliminate the “Investigator” too, for the same reason (the PI’s reputation detracts from the application’s merits).

An open discussion on the Review Criteria that has the potential to benefit reviewers as well as researchers is certainly a step forward. Let’s also get some discussion going on another equally important issue that would benefit the review process. Here is an excellent set of suggestions that gets at the heart of the frustrating problems researchers face as part of the review process:

Aslin, R. N. (2022). Two changes that may help to improve NIH Peer Review. Proceedings of the National Academy of Sciences, 119(51).

Will these changes be applied to SBIR/STTR grants (R41, R42, R43, R44) as well?

What is the problem we are trying to fix? Surely it is to get fair reviews that identify the best science and to reduce the burden on reviewers and submitters. The currently proposed changes seem to be a minor rearranging of the deckchairs rather than any fundamental change. Inter-reviewer variability is an important issue. No one minds if reviewers make fair comments and the scores are appropriate. The problem is when some reviewers are unreasonable or uninformed, and I suspect this is a recurring issue with certain reviewers that could be identified and fixed. Reviewer burden and submitter burden are important issues that could be easily addressed. I have suggested (PMID: 26676604 DOI: 10.1126/scitranslmed.aab3490) that submissions should consist of just the science pages, the biosketches, and an abbreviated budget. The other material could be requested and reviewed only for potentially fundable grants. Currently, a massive amount of time is wasted on the non-science sections of the majority of grants, which are never funded. Folks might not remember or know about the “old days” when one had to have IRB approval at the time of grant submission. Thousands and thousands of hours were wasted on IRB approvals for grants that were never funded. It sounds incomprehensible now, but we are in the same position today with paperwork that is not the science of the grant.

The proposed changes to the review criteria represent an improvement over those currently in use.

I strongly support the changes, which should be implemented as soon as possible.

Current criteria emphasize Innovation, a criterion that I think is very frequently misused.

I have heard reviewers at Study Sections say many times something like “the use of proteomics is not novel”, or more generally “the use of XXXX technology is not novel”, when in many cases the proposed experimental procedures were sound and the most appropriate. Very rarely does a grant application proposing interesting research also use truly novel experimental procedures, and sometimes reviewers very casually penalize the application for its lack of innovation.

The proposed changes are welcome but won’t eliminate bias from the review process. It is quite obvious that some well-connected people keep getting NIH money for decades without showing the promised results. New people cannot get in unless a well-connected mentor opens the door for them. There should be some element of anonymous review as the first step. The pool of NIH reviewers needs to be expanded. The current system seems like people scratching each other’s backs.

There are so many excellent aspects to these changes. First, aggregating significance and innovation tempers the pendulum swings between the avant-garde but undoable and the necessary but predictable. Coupling rigor and feasibility will hopefully weed out some of the enticing grantsmanship that is actually not within the investigators’ capacity (or the realm of possibility). Finally, I HOPE the expertise and resources section could be coupled with links to some of the centers of excellence where needed, rather than downgrading the submission due to a less robust institution. To expand the pool of researchers with good ideas beyond just the top institutions, access to related documents should be blinded until the first two sections are complete. Specifically, blinding the names/training in biosketches and separating environment and facilities could be done without losing the information needed to judge whether the team can complete the project.

I echo previous comments re: blinding or revising the format of the application PDFs. After taking the recent NIH training on bias in reviews, it struck me how the PDFs we are given to review present the information in an order that increases bias: the very first thing you see on the front page is the Institution. Then, after a brief abstract, you skim through Facilities and Equipment and read Biosketches before you get to the Specific Aims page. At that point, you read the science with the institution and biosketches in mind. Maybe other reviewers are better at not letting the context bias their view of the scientific content, or at skipping to the science and refusing to look at the context first, but as a relatively junior reviewer, I found it very hard not to let the context affect my view of the science. Presenting the information in a different order, or partial/sequential blinding and unblinding, may be needed to decrease the impact of some of these subconscious biases.

My prediction: these changes are going to be just as effective as shuffling the deck chairs around the deck of the Titanic. They are minor tweaks to a massively cumbersome system, and they will do little to make it less cumbersome. Grant writing is incredibly burdensome for both grant writers and grant reviewers, and that will not change just by tweaking the scorable categories. There is so much that could be streamlined, but it would take a large cultural shift at the NIH. For example, whether anyone wants to admit it or not, much of the decision to fund boils down to an investigator’s reputation and track record. So why not allow those investigators with good track records to just skip most of the process, submit a CV and have some fraction of their funding continue more or less automatically? Of course there would have to also be a system whereby those without a track record to justify such an award could still compete for a chunk, maybe the majority chunk, of NIH money. But at least the reward after some years of success is that you would have more stable funding and less worry about having to drop your research program entirely (or even lose your job).

I fully agree with simplifying the review process by focusing on the key issues that will make a project a successful and useful contribution to our knowledge. Extraneous issues that do not contribute to an improved outcome should be minimized.

One of the major factors adversely affecting the review process, as some of the commenters have suggested, is the complexity of the applications. Most applications get an hour or two of attention from the reviewer (often less), so much of the less relevant material should be removed. Simplifying and clarifying the review criteria will then help reviewers focus (in theory) on the key aspects of the project that will contribute to a successful outcome. But there should also be a remedy for reviews that are clearly erroneous or, as many colleagues have noted, that claim additional material is required when that material is clearly laid out in the application. Unless there is a willingness to invest greater resources in the review process, simplifying the application and clarifying the review process is the next best choice.

To help reviewers focus on the critical issues, presenting questions to be answered may be more effective than stating review criteria (e.g., significance, innovation), which are very subjective. These questions might include:

Is the question asked important?

Can the results have an impact on the field? (e.g. change our views in a meaningful way, provide a tool that will have broad application for research, provide key insights into a controversial topic)

Will the experiments provide clear answers to the question?

How will the results be useful to others? This point can include changing current views or practices, or providing new tools or approaches.

Will the data generated be clearly interpretable and likely reproducible (is the investigator's logic solid)?

Is/are the investigator(s) capable of performing the experiments?

Do they have the resources to perform the experiments? The answer should be based on what they have available or have direct access to, not on what exists in the greater institution. This question may level the playing field across institutions with widely differing resources.

Answering these questions may provide a better overall evaluation of a project, and their use should be enforced at the IRG meetings.

Imprecise terms such as "insufficiently mechanistic" or "lacks rigor" should be disallowed. They have frequently been used as catchall phrases to downgrade or denigrate a project without explaining the basis for the criticism. Either the project can be done or it cannot. The results will either be clear and interpretable or they won't. Those are the key issues. Terms such as "insufficiently mechanistic" or "not rigorous" tell other reviewers and the applicants very little about what specifically is wrong and needs to be corrected. Criticisms need to be justified.

Although anonymization of the applications is attractive, as it might remove or mitigate reviewer bias, it would also raise questions about the applicant's expertise to perform the study. True anonymization would make the review process even more complex than it already is.

These changes could have some impact on peer reviewer burden; however, the bigger issue is how the length of the applications has ballooned (e.g., the human subjects section) and continues to balloon (e.g., requiring both the resource sharing section and the data sharing plan as of January 25). For human subjects applications, for example, reviewers now scour the research plan, the recruitment/retention plan, the timeline, AND the human subjects section for information about recruitment. As another example, information about the investigators can often be found in the budget justification, the facilities, the biosketches, AND the research plan. In addition, why is a massive Data Safety and Monitoring Plan required at submission? The PO often wants the Data Safety and Monitoring Plan in their own format at the time of the JIT request, and all the reviewer needs to know is whether the board is internal or external and a few other minor details. Just scrolling through these massive files is time-intensive.

The review process could be much improved by requiring reviewers to indicate whether each bullet point is a major, moderate, or minor concern, and then requiring that the score be calibrated to these ratings. The summary paragraph would then be unnecessary, because it would be clear which concerns are driving the score. I am amazed when I hear reviewers at study section list many major concerns and then give the application a 3.