
In an earlier post we examined the number of competing applications for investigator-initiated research project grants (RPGs) over time and found that, in the past decade or so, most of the increase in submitted applications is due to more applicants rather than more applications per investigator. Nevertheless, we did see a slight rise in the average number of applications per applicant (from 1.3 in 1998 to 1.5 per year in 2011).

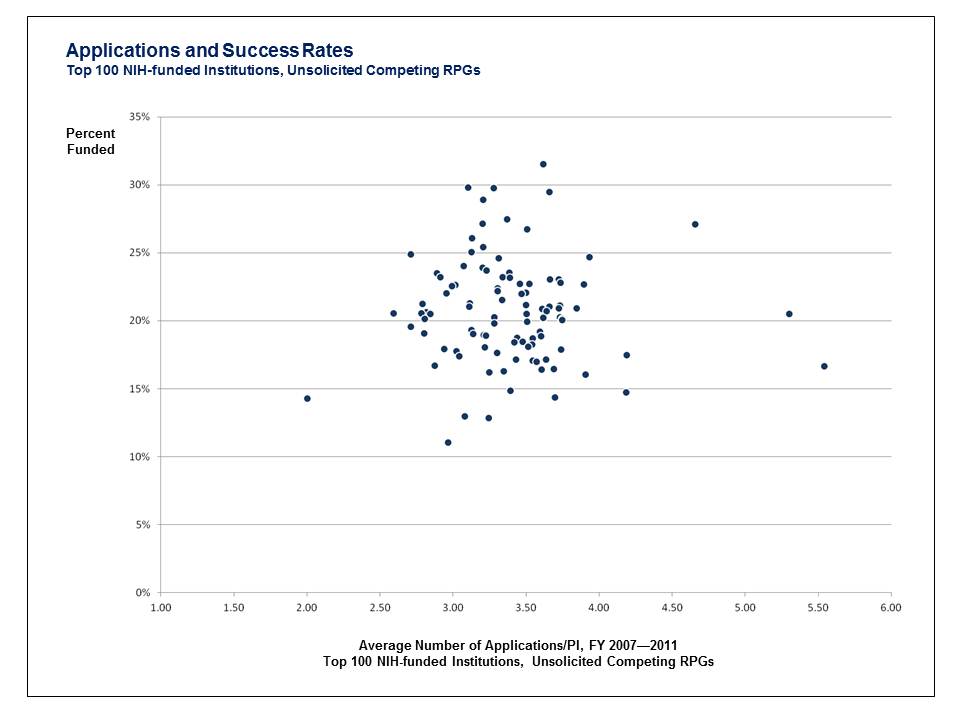

Looking at the average of the entire population of applicants could mask interesting variations in the data. To try to reveal such variations we decided to delve a little deeper into the top 100 NIH-supported institutions using the same data set as in the previous post (i.e., only investigator-initiated competing applications for RPGs, Recovery Act excluded). The figure below shows a scatter plot of the average number of applications per principal investigator (PI) at those institutions over 5 years, from fiscal year 2007 to fiscal year 2011 (each point represents an institution). We used the average number of applications/PI at each institution so that the analysis would not be skewed by differences in the number of investigators at each institution. The first thing you can see is that although the average number of applications per PI is quite variable, it clusters between 2.5 and 4 applications/PI over the 5-year period.

We also considered the question of what correlation might exist between the number of applications submitted and the percentage of funded applications at each institution. It is evident from the data that, even within our top 100 funded organizations, institutions with similar averages of submitted applications per PI can have very different levels of success. For example, among institutions with an average of 2.9 to 3.1 applications/PI, anywhere from 11% to 30% of the applications were funded.

Of course, there may be many reasons for this variability in funding rates, such as differences in the types of science conducted at different institutions and the capabilities of the applicants. Another possibility is that there are differences in the management practices of each institution and how institutions review or vet applications before submission. Whatever the reason, we don’t see a strong correlation between the average number of applications submitted per PI and the percent of funded applications.

Of course, range restriction is a way to obscure a correlation and this is what you have possibly done here.

If this is the number of applications over 5 years (rather than per year) then this population of applicants submits fewer grant applications than the mean, yes? Which suggests that they are more successful... perhaps an obvious conclusion from the restriction to the top 100 grant-getting institutions.

This also brings up the question of whether restricting it to unsolicited applications makes a difference. Do we know that the highest-funded-institute PIs respond to RFAs at the same rate as everyone else? Perhaps part of their success lies in a higher-than-average response to RFAs (which might explain the above, wrt lower than average number of apps/year/PI).

we don’t see a strong correlation between the average number of applications submitted per PI and the percent of funded applications.

In other words, the answer to the title question “Does more applications mean more awards?” is an unequivocal “yes”:

a PI who submits 2.5 applications per year is likely to receive ~0.5 awards.

a PI who submits 5.0 applications per year is likely to receive ~1.0 awards.

correction to my original comment, as the x-axis looks to be over a five year period, not per year:

average number of applications per principal investigator (PI) at those institutions over 5 years

Does the plot show average number of applications/PI for the five-year period from 2007-2011 or the average number of applications/PI PER YEAR over this five-year period?

The plot shows the average number of applications per PI over the 5 year period (not per year).

The average numbers of applications per applicant on the plot are all below 6.00 and hence correspond to fewer than 6.00 applications/5 years = 1.2 applications per year. Yet in an earlier post, the average number of applications per PI was stated to have increased to 1.5. Is this per year? How does one reconcile that all 100 top funded institutions are well below the stated overall average number of applications per PI?

Can you please explain how an investigator was selected for inclusion? These numbers make no sense unless you are dividing by a lot of people who are not really actively seeking NIH funds across the entire 5 year interval.

The more I look at this post, the more concerned I become. I applaud you for trying to mine the database for information on questions which may help to shape policy going forward. But based on what you present in a blog post, it appears that nobody is really thinking deeply about the questions that need to be answered. This post gives the appearance of being a followup to the observation of the small increase in applications per investigator per year. It gives the appearance of asking if success rate is correlated with the number of applications submitted, which is an excellent question. But then you have decided to base this on individual institution averages which makes very little sense. Applicant institutions tend to have a range of PI characteristics that are of greatest interest- subfields, job category (soft vs hard money, med school vs academic campus, etc), seniority, etc. Also, the selection of application success rate seems bizarre here. Far better to look at per-PI success rate over successive Fiscal Years or even cycles to get an idea of how institutional variation may shape PI behavior. Also to look at the average number of grants per PI- it would be interesting to see if an institution that expected more grants was associated with higher/lower success rates or higher/lower applications per PI.

But really, this should not be an inquiry into applicant institutions. It should focus on categories of PI- new vs experienced, age cohorts, sex and ethnicity, subfields (funding ICs perhaps?), MD vs PhD, etc. As with the above comments, it should also correlate the number of applications (per year) and the individual success rate with funding level of the PI.

The underlying question here appears to be whether PIs are submitting more applications because they need more grants (because the $250K modular award of 10 years ago had the buying power of about $350K today, more or less) or because they need to do this to maintain the same number of grants (because success rates are low). Or whether the mean is skewed because it takes tremendously more applications for newcomers to enter the system while established investigators' behavior remains unchanged. Etc.

There are many questions that could conceivably be answered with good inquiry into your database but I fear these one-off graphs will do more damage than good if they are interpreted to mean something that they do not.

mat, there has been a lot of focus on the grant success of the lone PI.

How does the NIH know whether or not an institute’s management is any good? Quite a bit is spent on indirect costs which helps cover the costs of management. What are the ways for the NIH to detect systemic bad management? I believe the institutes showing normal success rates in the graph are a reflection of overall good management and higher quality institute research. For example, hiring a lot of PIs to write grants who may not be competitive in the first place would lower success rates. Or hiring high quality personnel and generally making their lives and work unsatisfying would lower success rates. Or failing to provide proper incentives for collaborative work could lower success rates. Or not having a reliable system for mentoring and vetting grant applications could lower success rates. Turnover of the applicant pool at an institute may also be a relevant indicator of management quality. The NIH needs to be able to manage the managers or at least to evaluate institutes systematically. While the success rate can never be 100%, the 20% of PIs being funded should be highly innovative and productive and that certainly requires good management.

This may be true, but the stated reasons for analyzing these data and posting them on this blogge have absolutely nothing whatsoever to do with what you are talking about.

Comradde,

Well you’re perhaps correct about the stated idea behind the graph. But, when one has quality data one must listen to what it says rather than look for something that is not there. I’m thinking the graph tells us more about the team rather than the individual players. Sometimes a bad coach can be the problem with a team and player performance.

The circle in the center appears to be the average. There are left and right extremes for frequency of submission with equal success rates. On the vertical, we find the potential differences in institute quality. I agree with mat though. If an institute always took high risks (innovative), it may have lower success rates in grant awards. If true, there is a lesson there for the individual PI. Would be nice to see the publication rates or another indicator of productivity/innovation attached to the average success rates in the graph.

Citizen-sci,

All of your gripes about alleged bad-management of a University may affect success rate but it is a leap to infer that therefore the people who do manage to secure an R01 under such conditions will do an inferior job in the future.

One might also point out that a University dean who sets out to be as efficient as possible about extracting money out of the NIH is not necessarily supporting the best, most innovative, science.

Perhaps the Rock Talk blog staff would care to identify the outlying Universities in the plot so that we can perform our introspections about whether this distribution is a meaningful reflection of University quality.

what is the rho (Spearman correlation) on the data ? thanks
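For readers who want to check this themselves once institution-level numbers are available, here is a minimal sketch of how Spearman's rho could be computed. The data values below are hypothetical placeholders, not figures read off the plot, and the function names are invented for illustration. Rho is simply the Pearson correlation of the ranks, with tied values sharing their average rank.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of equal values (a tie group).
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-institution values (NOT taken from the NIH plot):
apps_per_pi = [2.1, 2.6, 2.9, 3.0, 3.1, 3.4, 3.7]
pct_funded = [22.0, 18.0, 11.0, 30.0, 24.0, 19.0, 21.0]

print(f"Spearman rho = {spearman_rho(apps_per_pi, pct_funded):.2f}")
```

A rho near zero would be consistent with the post's statement that no strong correlation is visible. As an earlier comment notes, restricting the sample to the top 100 institutions could itself weaken any correlation.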

Providing a summary of the data is useful and controversial, as can be seen from the comments. What the community truly needs is the data driving the analyses. I would ask NIH to provide the data to the public for its own analyses. To my knowledge awards are provided via Reporter, but application data is not on the web. Limited de-identification would be needed, but uncloaking the information is a necessary evil to sustain the trust.

Why is the nature of getting funded only being discussed in the context of the submitting PI or PI’s institution? What about NIH, its funding priorities, the egotistical, inconsistent reviewers, and the good old boys club? If this sounds cynical–it is, and I’ve actually been funded! The speculation on the meaning of these data is pure nonsense.

I would like to see added to the data above the years in which NIH increased the post-doc and pre-doc salaries. Institutes usually use these rates (or higher) as a pay scale even for non-NIH-funded students and post-docs. In addition, over the years in which the large increase in grant applications occurred, there have been large increases in animal costs, and while reagents such as antibodies have become increasingly available, they are not without cost. Therefore, given the amount of data it takes to publish a paper these days and the fact that the modular budget cap of $250K has not increased, it is not at all surprising that PIs must apply for more grants.

I like the comments on the component of faculty salary covered. Do you have the data that would compare the % of faculty salary covered per PI on a grant during these years?

How do I find the answer to, “what is the average number of R01 submissions prior to first success for investigators not at a top 100 funded school?” (could include resubmissions or asked as independent grant submissions)

Rock Talk Blog Team:

Let me reiterate my earlier question: If the average number of applications per applicant is 1.3-1.5 per year, then the average number of applications over a 5-year period would be 6.5-7.5. Yet all 100 institutions have averages below 6.0 over this period. How can these figures be reconciled?

You really need to address Jeremy’s question, as it appears that something is very wrong with these data.

If calculated from the current blog post, the average per investigator per year will be lower than the average reported in the earlier blog post because the earlier post reported the average for a single year (the total number of applications received in a single year divided by the number of different applicants), whereas the blog post here reports the average over a 5-year period in which not all investigators apply every year. For example, say all investigators were to submit two applications in one year, and only one year, within a five-year period: the average number of applications per PI in any given year would be 2.0 (as calculated in the earlier post), but the average over the 5 years would be 2.00 applications per investigator/5 years = 0.4 applications per investigator per year.

Thanks. In other words, for the calculation of the number of applications/PI per year, the denominator is limited to the number of individuals who applied in that year, whereas for the calculation of the average number of applications/PI over the five-year period, the denominator includes all individuals who applied at least once over that period.

Based on this, the average number of applications per year for all applicants in this five-year period ranges from 2.0/5 = 0.4 to 3.7/5 = 0.74 for this group of institutions. This seems to me to be a more relevant parameter since it reflects the average application activity for all active investigators over a period comparable to the lifetime of a grant.
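To make the denominator difference concrete, here is a small sketch using made-up records that follow the blog team's hypothetical: every investigator submits two applications in exactly one year of the five. The record layout and function names are assumptions for illustration only, not how the NIH data is actually stored.

```python
from collections import defaultdict

# Hypothetical toy records: (investigator, fiscal_year, applications submitted).
# Each investigator applies twice, in one year only, as in the example above.
records = [
    ("A", 2007, 2),
    ("B", 2008, 2),
    ("C", 2009, 2),
    ("D", 2010, 2),
    ("E", 2011, 2),
]


def single_year_average(records, year):
    """Applications per applicant, counting only people who applied that year."""
    per_pi = defaultdict(int)
    for pi, fiscal_year, n_apps in records:
        if fiscal_year == year:
            per_pi[pi] += n_apps
    return sum(per_pi.values()) / len(per_pi)


def period_average(records):
    """Applications per applicant, counting everyone who applied at least once."""
    per_pi = defaultdict(int)
    for pi, _, n_apps in records:
        per_pi[pi] += n_apps
    return sum(per_pi.values()) / len(per_pi)


n_years = 5
print(single_year_average(records, 2009))  # earlier post's style of average
print(period_average(records))             # this post's 5-year average
print(period_average(records) / n_years)   # the same, expressed per year
```

With these toy records the single-year figure is 2.0 applications per applicant, while the five-year figure of 2.0 works out to 0.4 per year, matching the arithmetic in the reply above. The same division by 5 gives the 0.4 to 0.74 range quoted for the plotted institutions.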

Dear Sally,

Whether these numbers are accurate is irrelevant. Those of us in the trenches see the reality of the funding crisis. I am rather puzzled by all the “happy talk” in the Rock Talk. NIH funding right now is in a serious crisis. Why isn’t the NIH, you and its Directors screaming to Congress about the dismal funding levels leading to dismal paylines? Layoffs of personnel are happening across the country due to loss of NIH grant funding. Interim paylines at NIAID are at 6% and some other institutes are no better or worse! The system will soon implode at these funding levels and we will lose many investigators, particularly at the junior faculty level. As a seasoned investigator I have seen budgets rise and fall, but the outlook for funding is poor for the foreseeable future, regardless of the outcome of the election. As scientists we need to be advocates for funding, but we need the NIH to back us, not tell us that everything is rosy. We need the NIH leadership to step up and tell Congress that a disaster is in progress that will further distance us from the rest of the world in science and lead to further job losses and reduced domestic spending. Perhaps those in power do not realize that the grant money we receive is spent primarily on people and supplies, the latter purchased from many American companies. There are long-term consequences that will result from the ongoing crisis that soon will be irreversible. So please, stop with the positive outlook; it doesn’t play in Peoria or anywhere else. Talk to some scientists and ask them what the sentiment is around the country and you will see the reality of the funding crisis.

Thank you for your consideration.

Leo Lefrancois, PhD

Dept of Immunology

UCONN Health Center

Leo-

Some of the unhappy talk posted on this blog shows pretty clearly that the issue is that far too many investigators are crowded around the trough. Perhaps what we really need is for many “seasoned investigators” who have had a good run to get out of the way of those of us who are more junior. Why is it that the elder statesmen types never seem to call for the old guard to shrink their labs and/or retire?

Leo, Drug Monkey has it right! We have screamed, and were heard – 20 years ago a doubling of NIH funding happened. However, why are we now at exactly the point NIH was when I started, around 1992, when 14%ile wasn’t enough to get funded? Because the doubling of the NIH has not been used responsibly by Universities! Now there are many more research science buildings (others have written about the boom triggered by the doubling of NIH funding), there are more scientists, they are expected to bring in a higher fraction of their salary, hence a higher fraction of grant $$$ goes to faculty salary than 20 years ago, and they are pretty stressed out. Although I am painfully aware of the problem for PIs – new and old alike – more money is not the solution. I refuse to sign these calls for more money. We trained too many scientists, there is too much mediocre science done, and the other tasks that Professors were doing (teaching, medicine) have fallen by the wayside, as tenure is now too much determined by NIH success.

What I think NIH needs to do is follow Bruce Alberts and Francis Collins’s suggestion from ~2010 to limit PI salary to 50%. This one motion will lead to more responsible behavior by Universities, it will get rid of a lot of faculty or turn them into staff scientists, but also limit the University’s liability in the future, as they have to plan for 50% of a faculty member’s salary. There are many additional measures that can help – check in which areas PhDs still get jobs and fund K-awards and TGs in these areas (e.g. Bioinformatics, Biostatistics PhDs are still needed) and in which areas there are too many (wet lab molecular biology?) who can’t find jobs after postdoc. Don’t fund building or infrastructure in new research buildings, don’t fund foreign grants, etc. …