
We’re always interested in data, and at NIH we use data to examine the impact of new policies. Among the changes NIH implemented under its Enhancing Peer Review initiative was the assignment of scores to each of five individual criteria for research grant applications: significance, investigator(s), innovation, approach, and environment. The purpose of these criterion scores is to provide additional information to the applicant, but today I am going to use them to show you how we can examine reviewer behavior.

This topic will be familiar to those readers following the NIGMS Feedback Loop. In his latest post on this topic, NIGMS Director Dr. Jeremy Berg presented an analysis conducted by my Division of Information Services. I’d like to elaborate a bit more on this and provide some additional analyses.

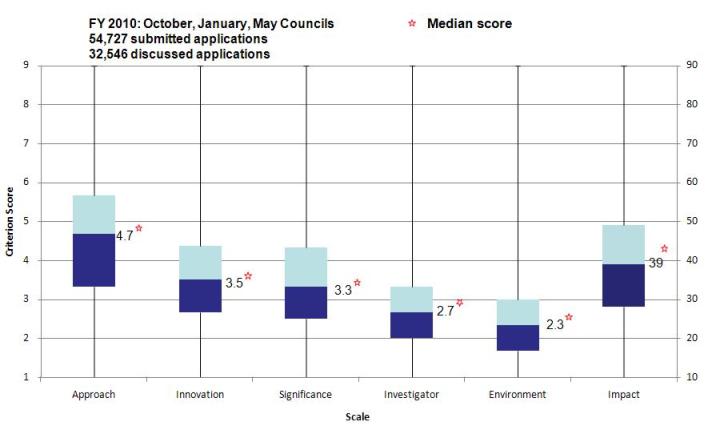

Note that the data presented represent 54,727 research grant applications submitted for funding in fiscal year 2010. Of these, 32,546 applications were discussed and received final overall impact scores.

Below you see distributions of the criterion scores (averaged across reviewers) and the numerical impact scores. (50% of impact scores fall within the colored boxes with 25% below the median in dark blue and 25% above the median in light blue). These distributions reveal differences in the use of the rating scales by reviewers. It appears that reviewers use the full scoring range for all 5 criteria and the impact score (the lines below and above each colored box range from 1 to 9), but you will notice that for the approach criterion reviewers distribute their scores more widely than for the other criteria.

Also, the criterion scores are moderately correlated with each other and with the overall impact score.

| | Impact | Approach | Significance | Innovation | Investigator | Environment |
| --- | --- | --- | --- | --- | --- | --- |
| Impact | 1 | | | | | |
| Approach | 0.82 | 1 | | | | |
| Significance | 0.69 | 0.66 | 1 | | | |
| Innovation | 0.62 | 0.61 | 0.66 | 1 | | |
| Investigator | 0.56 | 0.58 | 0.53 | 0.51 | 1 | |
| Environment | 0.49 | 0.51 | 0.49 | 0.48 | 0.69 | 1 |

As expected, when the criterion scores are subjected to a factor analysis, their inter-correlations result in two factors—one on which significance, innovation, and approach load heavily—accounting for most of the covariance among criterion scores, and a second factor with a smaller portion of covariance involving investigator and environment.

| Criterion | Factor 1 | Factor 2 |
| --- | --- | --- |
| Significance | 0.75 | 0.40 |
| Innovation | 0.71 | 0.41 |
| Approach | 0.68 | 0.48 |
| Investigator | 0.45 | 0.73 |
| Environment | 0.39 | 0.71 |
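As a rough check on the claim that one dimension accounts for most of the shared variance, the sketch below takes the five criterion inter-correlations reported in the correlation table and estimates the largest eigenvalue of that matrix by power iteration. This is a principal-component style calculation, not the factor analysis that produced the loadings above (its extraction and rotation settings are not stated in the post), and the function name `largest_eigenvalue` is made up for illustration.

```python
# Criterion inter-correlations copied from the correlation table in the post.
# The Impact row/column is left out because the factor analysis described
# in the text used only the five criterion scores.
CRITERIA = ["Approach", "Significance", "Innovation", "Investigator", "Environment"]
R = [
    [1.00, 0.66, 0.61, 0.58, 0.51],
    [0.66, 1.00, 0.66, 0.53, 0.49],
    [0.61, 0.66, 1.00, 0.51, 0.48],
    [0.58, 0.53, 0.51, 1.00, 0.69],
    [0.51, 0.49, 0.48, 0.69, 1.00],
]


def largest_eigenvalue(matrix, iterations=200):
    """Estimate the dominant eigenvalue and eigenvector by power iteration."""
    n = len(matrix)
    vec = [1.0] * n
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in product) ** 0.5
        vec = [x / norm for x in product]
        # Rayleigh quotient of the normalized vector
        eigenvalue = sum(
            vec[i] * sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)
        )
    return eigenvalue, vec


top_eigenvalue, top_vector = largest_eigenvalue(R)
# Five standardized variables have total variance 5 (the trace of R).
share = top_eigenvalue / len(R)
print(f"largest eigenvalue: {top_eigenvalue:.2f}, share of variance: {share:.0%}")
```

Because every correlation is positive, the largest eigenvalue must lie between the smallest and largest row sums of the matrix, so the first component carries roughly two thirds of the total variance. That is consistent with a dominant first factor, though it says nothing about how a rotated two-factor solution splits the loadings.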

For applications receiving numerical impact scores (about 60% of the total), we used multiple regression to create a descriptive model to predict impact scores using the applications’ criterion scores, while attempting to control for ten different “institutional” factors (e.g., whether the application was new, a renewal, or a resubmission). In the model, scores for the approach criterion had the largest regression weight, followed by criterion scores for significance, innovation, investigator, and environment. The same pattern of results was observed across multiple rounds of peer review and institute funding decisions.

| Criterion | Regression Weight |
| --- | --- |
| Approach | 6.7 |
| Significance | 3.3 |
| Innovation | 1.4 |
| Investigator | 1.3 |
| Environment | -0.1 |

As Jeremy noted in one of his posts, it may be tempting to overinterpret such complex data, so we continue to explore their implications and will watch to see if and how these patterns evolve over time. I’m sure we’ll be hearing from you on your interpretations of these data, which will be interesting and informative.

Thanks for diving into the broader dataset!

I don’t recall the NIGMS posts making the range-restriction of Investigator and Environment so clear….possible complication to the correlation with overall impact?

Any chance you could break this down by ESI/NI vs established and by type 1 vs type 2?

The regression really tells the story – solid and stolid continues to win. It is mildly encouraging that “innovative” does not have a negative regression coefficient, but my experience has been that “highly innovative” translates to “too risky, we aren’t going to fund this.” I had thought the new scoring system was supposed to give more weight to innovation, but it doesn’t seem to be working out that way. This seems unlikely to be a good recipe to continue to lead the world in scientific discovery and, er, innovation.

Sally, these are really interesting data. As a reviewer for NLM, the regression model weights you present reflect exactly the order in which I “weigh” applications. I would not have guessed the spread in those weights would have been as big as those presented on the blog, but the order is spot on. If someone can explain why “Environment” has a negative weight, I would love to hear it!

I would guess Environment has a negative weight because reviewers probably give a good Environment score as some sort of consolation for grants they don’t want to fund; however, good grants also tend to get good Environment scores. Just look, those scores have the lowest median. In my opinion, Environment doesn’t really matter, unless it is a really really bad place (i.e. for an SBIR grant where the company doesn’t have a facility for any research), as a good investigator will get results regardless. That’s probably why most everyone seems to get an “A” for effort for Environment.

Re “If someone can explain why “Environment” has a negative weight, I would love to hear it!”

A. large correlations with other predictors; suppression

B. perhaps it reflects less research-experienced or less well-known institutions being given slightly better scores when an application is better than the reviewer would have expected. No slack for well-known investigators/institutions.

As I see it, the Environment criterion may play a role in the performance and quality of proposed research in two ways, hardware and software. The former means that at a reputable institution the equipment and facilities are usually good and conducive to getting quality data; the latter relates to the rigor of the researchers and their colleagues when thinking about and carrying out the proposed research. For instance, in a teaching institution or community college the way people think about and treat research may be different from major research institutions. To some degree, these differences may play a bigger role than Approach. This is not to discriminate, but since NIH resources are getting more and more scarce, every aspect needs to be taken into consideration.

I agree with the CSR effort to move away from overemphasizing approach, especially because reviewers tend to say too much about approach when the real issue is that they do not understand the proposed approach, not that the approach itself is flawed. In such cases, if they want to say anything, they should only urge the applicant to pay attention to approach X instead of saying approach X is bad. The fact is that if one real expert is included in the review team for an application, that is already pretty good. Very often, none of the three assigned reviewers is an expert in the proposed area, but they still make many critiques of approach. This has nothing to do with CSR or NIH’s efforts to improve review practice; it is common sense that everyone should have learned in elementary school. Less emphasis on Approach may improve review fairness to some degree.

I think this indicates clearly that reviewers continue to place weight on approach despite urgings from CSR for the emphasis to be placed on significance. Guidelines for the 12 page application stipulate that less space should be devoted to approach, opening investigators up to the possibility of being dinged for details that simply cannot be fully described due to space limitations. Reviewers, however, still care about these details, and they care a lot. Seems that the whole point of the new applications and scoring system was to get reviewers to focus on the significance and innovation and to NOT nit-pick the approach. These data show that the goal post has been missed. Either CSR should get a clear message to reviewers about the focus of the new scoring system–and consistently hold all panels to the same standards–or make it clear to investigators that approach DOES indeed carry the bulk of the score, just as it did in the past. I see some inconsistent messages being sent–NIH telling investigators that approach carries less weight, and CSR/reviewers continuing to tout approach as the royal road.

I agree with your comment. Reviewers have a very hard time letting go of the need for details. That said, I think that if CSR feels strongly about helping reviewers shift their thinking away from the details of approach and more toward the significance and innovation, they can play a much stronger role in training reviewers and study section chairs. Reviewers will continue to review as they have been reviewed so change will take time, but only if the CSR strengthens their message on the relative weighting of approach to significance and innovation.

This makes sense. 1) If the approach has problems, the whole proposal is doomed because you propose something impossible. 2) The approach section is a good target for a cheap shot. If the reviewer wants to kill the proposal, he/she can easily find a minor problem in the approach section.

Here’s an alternative view of why approach weighs heavily….

Regardless of how good an idea is, and how significant the area, execution matters. If a PI sees a good idea, but not how to test it rigorously, a good reviewer is going to reflect that in the score. This is very different from “not letting go of the need to see details.” This is a reflection of poor study design, poor use of tools, and experiments with ambiguous goals. It’s also a fair and reasonable criticism of a proposal.

These data suggest that reviewers find that the approach criterion still provides them the most useful information in determining an impact score followed by the significance criterion. This information is important for investigators in future preparation of proposals. Changing behavior is difficult and slow and I think these criteria are moving reviewers in the right direction.

Professor Dubner correctly notes that reviewers continue to find the approach criterion the “most useful”. But most useful in what sense? I would argue that many NIH reviewers approach the review process by looking for reasons to reject. These are the easy, low hanging fruit that can simplify the reviewer’s task by using flaw scanning as the default review strategy. As soon as I see that power calculations are missing, that a scale I don’t like was used, or that the applicants are presenting some approach that is new and unproven, my work as a reviewer is far simpler. Most reviewers don’t have the time or expertise to look at applications in a deeper, more sophisticated way, and of course they aren’t being compensated and have too many to review in any other way than the highly efficient (more useful) flaw finding approach. These data show once again what we already know, that the changes put in place have not succeeded in shifting reviewers away from safe, easy, incremental, low risk projects toward rewarding innovative projects that are potentially big impact. Several commenters have said correctly that behavior change is slow and will take time. Our own experience in health behavior change in areas like diet, exercise and smoking should have told us that until the underlying incentives and structural conditions that surround behaviors shift, behavior change is actually quite unlikely. Same is true here. As long as reviewers are chosen who do not have experience getting grants, program personnel who know the agenda of the institutes are silenced during the review, and reviewers are free to take the easy route of flaw-finding with the impunity of anonymity, nothing will change. How about paying reviewers for their time, having them put their names on the reviews they submit, and allowing program staff to advocate for innovative projects that reflect scientific goals? Time to consider more structural changes.

While I am pleased that NIH should conduct in-depth statistical analyses of review scores, I wish to add to Thomas Glass’s words of unhappiness and point out that NIH should invest resources in its body of grant reviewers. Fewer formulas, better minds. Reviewers shape science at large and careers. They should have credentials, in fact a pedigree of original scientific achievements and documented intellectual honesty. In the hands of trustworthy individuals anonymity is an instrument of fairness –the appropriate comments will be made irrespective of whether they are addressed to someone they know or do not know–, not an instrument of abuse as it is instead in the hands of lesser people. The newer review system with its multiple choice-type approach and dizzying repetitiveness is magnifying the problem. The system no longer requires from anyone the capability to extract, conceptualize, and express an original thought. No one can tell if reviewers have comprehended the proposal, and in fact often they have not. But there is complete impunity in making wrong or false statements. There are many possible solutions. The combination of inviting as reviewers people with credentials, offering the appropriate monetary reward for precious time, and asking for poignant opinions, would be a good start. Taking away the promise of anonymity might also be worth testing. For example, a system could be set up to randomly select reviews that are sent off to applicants with the reviewer names. It is pretty urgent to act.

I continue to review grants where the impact and innovation sections each amount to a single paragraph, while the approach section runs on for the rest of the research plan. This is typical. Having just devoted 3 pages to impact and innovation, I now wonder if this was a mistake. Reviewers are clearly not weighting these sections.

It may also be that reviewers are simply responding to what they receive. A grant that has a single paragraph on innovation probably cannot fairly be judged by putting a large amount of weight on this one little paragraph.

I would like to know whether the weighting of the innovation score in the final score increases with the amount of space that a grant devotes to this section.

These data make absolute sense and point to the only category where reviewers can actually differentiate between applications based on science.

Investigator/Environment are biased towards the low end because these are political death traps. As a reviewer, you never really know who is friends with whom, and harsh (but accurate) criticism of an investigator can easily come back and get you when it’s your turn to submit. The safest thing is just to say nice things about everybody.

Significance/Innovation are almost as worthless as review criteria, because the fact is that almost all research proposals are focused on some important topic, and all promise amazing things. Again, this is all political hand-waving.

So we are left with the approach. Did the investigator string together a series of logical experiments appropriate to the question at hand? Are there sufficient alternative methods to ensure success in the face of unexpected circumstances? Is it likely that all the promises made in the Significance/Innovation sections can actually be realized? This is the only place where the science itself can be judged.

FINALLY– the voice of reason! You are not only “honest,” you are right! Approach is indeed the criterion that separates the wheat from the chaff. As you say, almost every grant proposal seems to address an over-arching question that is of high significance. And beyond that, who’s to say if it is or isn’t?! The vast majority of significant discoveries– including those with the highest translational success– have been the surprises wrought from serendipity, usually the fruits of inquiry into simple questions of basic research. It is not until you tease away all the tedious outer ore that you find the gem inside.

I keep waiting for guidance from NIH, or other parties with an interest in elevating the impact of our research, as to how we should discern the nuances of a proposal that evince greater or lesser significance. Shall we trust the applicant’s pleading assertions that the topic is important? Should significance depend (crassly, in the eyes of some) on the sheer number of people affected by the disease-of-the-month to which we must now all tie our proposals? Or should it be based on the significance with which one set of experiments (versus the myriad alternatives) could cure said disease? No, wait– that’s actually approach.

Good points. It is also that the importance of your research is directly dependent on what you are going to do. If the experiments themselves are not well-designed, any claimed significance, innovation or impact is irrelevant. It is good that approach continues to be the most important factor.

It is stunning to see that NIH grant review processes are so political. So how is NIH to handle this? One way is to separate the Investigator criterion review from the other criteria so that the science scores are made in the absence of the applicant’s ID. It is surprising this issue has not been an important topic in the NIH review process reform.

The reviewers seem to put the major emphasis on approach, which is usually not possible to cover in the space allowed. However, this can be well judged from the previous experience of the investigators and their prior publications. Given that, the space allowed is OK. Another major persisting problem is that most reviewers are not serious about the clinical relevance of the applications. If the individual sections of the project are given different scoring scales, the final impact score may be more in conformity with the intention of NIH to direct its research based on clinical relevance.

Applause to NIH for looking at these data. The data appear to support the view that “environment” should be eliminated from the scored criteria; “environments” don’t do research, and are only relevant in terms of whether there is specialized equipment available to allow the research to proceed, which should properly be part of the approach. These data suggest there is no real impact of the score for “environment” on the success, so it should be dropped as a separate criterion. One could go further and suggest eliminating scoring of “Investigator” because it contributes little to differentiating the scores and relevant aspects are covered in the “approach.”

Has anyone done a similar analysis of SBIR/STTR submitted and discussed proposals?

It would be interesting to see the same analysis for funded and not funded applications. This will tell the real story about what’s important to have an application funded.

It is possible that the effect of significance on overall impact may be moderated by approach. If a study is poorly designed, there is little reason to expect that the findings will be important. If it is well designed, the findings may or may not be important; e.g., methodologically sophisticated trivia should get high scores on approach but low scores on significance.

This could be tested by adding an Approach X Significance interaction term to the regression model.
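A minimal sketch of what such a model looks like, assuming nothing about the real data: the scores and coefficients below are simulated and noise-free so that the fit has a known answer, and the helper names (`design_row`, `fit_ols`, `solve_linear_system`) are invented for illustration. The interaction enters simply as an extra predictor equal to the product of the two criterion scores.

```python
# Simulated data only: these coefficients are invented so the fit has a known
# answer. They are NOT estimates from the NIH review data.
TRUE_COEFS = [10.0, 5.0, 2.0, 0.5]  # intercept, approach, significance, interaction


def design_row(approach, significance):
    """Predictors for one application, including the product (interaction) term."""
    return [1.0, approach, significance, approach * significance]


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def fit_ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) beta = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Every combination of two criterion scores on the 1-9 scale.
rows = [design_row(float(a), float(s)) for a in range(1, 10) for s in range(1, 10)]
y = [sum(c * x for c, x in zip(TRUE_COEFS, r)) for r in rows]

coefs = fit_ols(rows, y)
print([round(c, 3) for c in coefs])
```

With real data the question would be whether the fitted interaction coefficient differs reliably from zero, which needs standard errors and the remaining predictors; neither is attempted here.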

My experience is that the phrase “highly innovative and potentially high impact” is the kiss of death in a review. I agree with Thomas Glass (above) that reviewers mostly seem to be looking for reasons to reject – and, furthermore, there seems to be a “lack of adult supervision” in the review process. No effort seems to be made to reconcile contradictory reviews; in the current funding environment, any negative means no funding, period.

Highly innovative work inherently is going to be riskier and the “approach” is likely to be less concrete and less certain because of this. Such heavy weighting of the “approach” will doom most highly innovative proposals.

Shortening applications has modestly decreased the work of preparing a proposal, which is important. But reviewers expecting the same level of detail in approach tend to counteract this – you have to try to squeeze all the “try to read my mind” minutiae into half the space. So huge amounts of very talented people’s time are still wasted on proposals that will never be funded. To come closer to fixing this, I would suggest:

1) change to a letter of intent format. Submit ~3 pages describing the idea. If the agency is not interested, they say so and no more time is wasted. Review will have to focus more on significance and innovation because there isn’t room for excruciating detail on approach – in fact, probably only a paragraph or two should be allowed.

2) when a full proposal is submitted based on a letter, it will have a substantially higher chance of funding. With fewer full proposals to review, reviewers could review more thoughtfully

3) SROs should have training/time/authority to focus the reviewers on what is supposed to matter – more attention to significance and innovation, reconciling disparate reviews

4) if needed, mathematically weight scores for significance and innovation so they have a bigger effect on the final scores (I had been under the apparently erroneous impression this was already supposed to be the case)

In my area of investigation, a correlation of .82 is not moderate; it is very strong. Given the reliability of the variables, when corrected for attenuation, the correlation between Impact and Approach probably approaches 1.0. As well, anything above .60 is surely more impressive than moderate.

I’m puzzled by what seems to be a bit of downplaying of the strength of the relationships.
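For readers unfamiliar with the correction for attenuation mentioned above, here is a small sketch of the classical formula: the observed correlation divided by the square root of the product of the two reliabilities. The reliabilities of the averaged criterion and impact scores are not reported in the post, so the values 0.80 and 0.85 below are purely hypothetical placeholders, and `disattenuate` is an illustrative name.

```python
def disattenuate(r_observed, reliability_x, reliability_y):
    """Classical correction for attenuation: r / sqrt(r_xx * r_yy)."""
    if not (0 < reliability_x <= 1 and 0 < reliability_y <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_observed / (reliability_x * reliability_y) ** 0.5


# 0.82 is the reported Impact-Approach correlation.
# The two reliabilities are HYPOTHETICAL; the post does not report them.
corrected = disattenuate(0.82, reliability_x=0.80, reliability_y=0.85)
print(f"corrected correlation: {corrected:.3f}")
```

The lower the assumed reliabilities, the larger the corrected value, so the conclusion depends entirely on reliability estimates that would have to come from the reviewer-level data.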

Given the multiple regression approach used here, I hope this set of findings per se will not ‘drive the field.’ The use of averaged scores is not optimal (although it helps finesse the ‘multi-level’ character of the original dataset), and might qualify as a ‘minor’ or ‘moderate’ weakness that can be addressed in a revision that focuses on the individual reviewer subscores and impact scores. There is some failure to exploit the research opportunity as completely as would be done in the best research on this topic. For example, the exclusion of applications without numerical scores makes the resulting evidence non-representative of the entire review process, and might introduce error in the desired estimates due to exclusion of important informative cases. The optimal model will include estimates for subscore influence on missingness of the numerical impact score, estimated concurrently with the modeling of the numerical impact outcome. (A ‘hurdle’ model comes to mind.) Be that as it may, even with a conditional analysis that excludes applications without the numerical impact scores, we actually have a multivariate profile of five ordinal subscore response variables, all appreciably intercorrelated with one another (approach, significance, innovation, investigator, environment), which then feed into the ordinal overall impact/priority score, as well as a binary fund/nofund decision variable at a different level of organization (i.e., just one decision per application, whereas there would be k subscores and impact scores per application in an individual level dataset, one for each of the k reviewers). The current multiple regression approach is sub-optimal.
If the Division will post the reviewer-level matrices required for regression analyses of the multiple Y and X variables used in the limited single-equation multiple regression analysis, some of us can try to fit alternative multiple-equation and perhaps multi-level models that should yield more definitive evidence on these matters. Individual-level datasets are not necessary, but it would be best to have each reviewer’s subscores, impact score, and fund/nofund decision variables in the matrices, as well as the subscores and a missing impact element in the matrix when the numerical impact score is missing.

I think that these data represent a reasonable model of the application population of interest (i.e., scored applications). I particularly like the way that both mean scores (and their variability) *and* the scores’ interrelationships are analyzed multiple ways. However, lisrelite is right that other considerations would come into play for a more nuanced model of all applications.

Based on the data in the first figure, I also wonder if some of the reduced predictive power of Investigator and Environment might be due to restriction of range issues. It would be nice to have standard errors of each regression coefficient so that the reader can appreciate how much error is associated with them individually.

As a new investigator, this highlights why the “help” that we have supposedly been getting isn’t enough. Because we aren’t as experienced, we need to provide more detail for reviewers to believe we are capable of doing the research. Adding an extra page or two, specifically for new investigators to give the level of detail that they are willing to forgo for more experienced investigators, would be helpful. For every grant I and many of my junior colleagues have submitted, we get marked down on approach (even after our mentors have looked it over) because there isn’t enough detail to convince the reviewers that we know how to implement the research we are proposing. Same grant with a more experienced PI would get a better score.

Well, I think that analysis does not reflect the lack of emphasis NIH places on individual criteria. As a reviewer, we are told “impact score and criteria scores do not need to correlate”. I often give very poor investigator and environment scores to grants when the applicant does a shoddy job with writing those sections, but my overall impact score is usually driven by the science since I usually know things about the environment/investigator not written down in the application. Thus, the lack of correlation between impact score and investigator/environment scores.

If it is true that NIH tells reviewers that “impact score and criteria scores do not need to correlate”, this represents a serious problem because the whole idea behind introducing criteria scores was to communicate weaknesses to the PI. If there is no connection between the impact score and criteria scores, why did NIH even change the format and introduce criteria scores? I would like an explanation.

Of course the key factor is resource of time for the SRAs and the reviewers. The more reviews per reviewer and study section, the lower the quality. This is a classic case of time-accuracy tradeoffs.

A few suggestions:

Reviewers’ scores should be standardized (z-scores) by reviewer so that no one reviewer has an undue influence on the outcomes.

SRA should be required to rate the reviewers and provide them feedback. Some SRAs are excellent in trying to ensure a fair review process and provide feedback to reviewers. Others let almost anything slide. More than once I have seen reviews sent to the investigators that are highly prejudicial to the study population and are clearly inappropriate. Of course, we have all seen reviews that are just inaccurate.

SRAs should provide study section members with score norms of other study sections so that there is greater consistency between study sections.
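The first suggestion above, standardizing within reviewer, can be sketched as follows. The reviewer IDs and raw scores are made up for illustration, and using the population standard deviation (`statistics.pstdev`) is one assumption among several reasonable choices.

```python
from statistics import mean, pstdev

# Hypothetical raw scores (1 = best, 9 = worst) keyed by made-up reviewer IDs.
raw_scores = {
    "reviewer_a": [1, 2, 2, 3, 4],  # tends to give favorable scores
    "reviewer_b": [4, 5, 6, 7, 8],  # tends to give unfavorable scores
}


def standardize_by_reviewer(scores_by_reviewer):
    """Convert each reviewer's scores to z-scores using that reviewer's own mean and SD."""
    standardized = {}
    for reviewer, scores in scores_by_reviewer.items():
        mu = mean(scores)
        sigma = pstdev(scores)
        if sigma == 0:
            # A reviewer who gave every application the same score provides no
            # ranking information; map all scores to 0 rather than divide by zero.
            standardized[reviewer] = [0.0 for _ in scores]
        else:
            standardized[reviewer] = [(s - mu) / sigma for s in scores]
    return standardized


z_scores = standardize_by_reviewer(raw_scores)
for reviewer, zs in z_scores.items():
    print(reviewer, [round(z, 2) for z in zs])
```

After standardization each reviewer's scores have mean 0 and standard deviation 1, so a habitually harsh or lenient scorer no longer shifts an application's average. The trade-off is that real differences in the quality of the applications assigned to each reviewer are removed as well.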

In teaching graduate students, I find that it is much easier for them to negatively critique a study than to appreciate it, albeit imperfect, and identify the positive attributes. I’ve also seen a similar dynamic on study sections. When senior and evenhanded investigators begin the discussions and point out the study’s strengths as well as weaknesses, the study section climate is much more balanced than when less seasoned investigators begin the critiques and only focus on the negative aspects of the study.

When I review, I am most influenced by approach, and solid preliminary data, unless the ideas are really innovative, in which case, that becomes most important. However in those cases, the other 2 major reviewers are far more likely to disagree than when I focus on approach.

Also, considering that most of the scored projects are not actually funded, Andrei Tkatchenko makes a very important point – IS the analysis the same for funded and not funded applications? You cannot do research on an excellent but not quite funded score!

If these grants are to support innovation in small businesses, I’d like to see a metric of successful innovative research in small business. I’d be delighted to see that metric’s correlation to funded and unfunded grant applications. Perhaps sales volume for the innovative product could be considered a success criterion? Or sale of the small business after market launch of the innovation? Or licensing of the innovation, resulting in sales for another business? Successful grant writing may be a goal for small businesses attempting innovation through research, but may also be an indication that the business is gaining expertise in grantsmanship, at the expense of innovation commercialization.

Your data are so impressive!

So, I think you can finally get the weights of the five criteria from the regression weights.

Do you have any plan to apply these weights for the 5 criteria in peer review?

If not, I would really like to know how you can use these weights to increase the quality of your peer review system in reality.