
In December 2018, the NIH Advisory Committee to the Director (ACD) offered a number of recommendations to NIH on the “Next Generation Researchers Initiative.” Among those, the Committee recommended “special funding consideration for ‘at-risk’ investigators. These are researchers who developed meritorious applications but who would not have significant NIH research funding if the application under consideration is not awarded. We plan to draw more attention this year, both inside and outside NIH, to outcomes for at-risk investigators, to ensure that those submitting meritorious ideas to NIH are not lost to the system.

“We agree with the ACD on the need to support these researchers and are identifying strategies to call attention to both early-stage investigators and at-risk investigators who are designated on a meritorious grant application that did not get funded.” Here, we present some data on our progress toward achieving this goal.

To gauge that progress, we focus on the “funding rate.” As described before, this is a person-based metric: the proportion of scientists applying for an NIH grant who are successful.

Funding Rate = (Number of Unique Awardees in Fiscal Year)/(Number of Unique Applicants in Fiscal Year)
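A minimal Python sketch of this person-based calculation, assuming a hypothetical flat list of application records (the `Application` fields are illustrative, not an NIH data schema). The point it shows is that each scientist is counted once in the denominator, and at most once in the numerator, no matter how many applications they submit:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """One application record (hypothetical schema, for illustration only)."""
    applicant_id: str
    fiscal_year: int
    awarded: bool


def funding_rate(applications: list[Application], fiscal_year: int) -> float:
    """Person-based funding rate: unique awardees / unique applicants in one FY."""
    in_year = [a for a in applications if a.fiscal_year == fiscal_year]
    applicants = {a.applicant_id for a in in_year}
    awardees = {a.applicant_id for a in in_year if a.awarded}
    if not applicants:
        raise ValueError(f"no applicants in FY{fiscal_year}")
    return len(awardees) / len(applicants)


# Toy data: "A" applies twice and is funded once; "B" and "C" are not funded.
apps = [
    Application("A", 2019, False),
    Application("A", 2019, True),
    Application("B", 2019, False),
    Application("C", 2019, False),
]
print(funding_rate(apps, 2019))  # 1 unique awardee / 3 unique applicants
```

On the same toy data, an application-based success rate would be 1 funded application out of 4 (25%), so the two metrics can diverge whenever some applicants submit more than once.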

We analyzed outcomes for applicants who submitted Type 1 (“de novo”) R01-equivalent applications in fiscal years 2016, 2017, 2018, and 2019. R01-equivalents follow the expanded 2019 definition, which includes activity codes R01, R23, R29, R37, R56, RF1, DP2, DP1, DP5, RL1, and U01, as well as activity code R35 with RFA/PA number GM16-003, GM17-004, PAR17-094, PAR17-190, or HG18-006. Not all activity codes are in use in each fiscal year (FY).
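The R01-equivalent rule above can be sketched as a small Python predicate. This is an illustration only: the function name is hypothetical, and the announcement numbers are copied in the shorthand used in the text, which may not match how they are formatted in actual NIH records.

```python
from typing import Optional

# Activity codes listed in the expanded (2019) R01-equivalent definition.
R01_EQUIVALENT_CODES = frozenset(
    {"R01", "R23", "R29", "R37", "R56", "RF1", "DP1", "DP2", "DP5", "RL1", "U01"}
)
# R35 counts only under these announcement numbers (text's shorthand).
R35_ANNOUNCEMENTS = frozenset(
    {"GM16-003", "GM17-004", "PAR17-094", "PAR17-190", "HG18-006"}
)


def is_r01_equivalent(activity_code: str, announcement: Optional[str] = None) -> bool:
    """Hypothetical check: does an application count as R01-equivalent?"""
    if activity_code in R01_EQUIVALENT_CODES:
        return True
    # R35 qualifies only when tied to one of the listed RFA/PA numbers.
    return activity_code == "R35" and announcement in R35_ANNOUNCEMENTS


print(is_r01_equivalent("R01"))              # True
print(is_r01_equivalent("R35", "GM17-004"))  # True
print(is_r01_equivalent("R35"))              # False: R35 needs a listed announcement
print(is_r01_equivalent("R21"))              # False: not in the list
```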

Table 1 shows the number of unique applicants who submitted Type 1 R01-equivalent applications, by career stage. The career stage categories are early-stage investigators (ESIs), other new investigators, at-risk investigators, and established investigators. These categories are mutually exclusive: every applicant is classified in one, and only one, career stage group.

Table 1: Number of unique Type 1 R01 applicants according to fiscal year and career stage

| Fiscal Year | Early-Stage | Other New | At-Risk | Fully Established |
|---|---|---|---|---|
| 2016 | 4,149 | 5,862 | 7,687 | 7,294 |
| 2017 | 4,286 | 6,148 | 7,629 | 7,889 |
| 2018 | 4,720 | 6,745 | 7,948 | 9,048 |
| 2019 | 4,746 | 7,193 | 7,916 | 9,614 |

Career stages are defined as:

- Early-Stage means no prior support as a Principal Investigator on a substantial independent research award and within 10 years of a terminal research degree or the end of post-graduate clinical training. A substantial research award is a research grant award, excluding the smaller grants that maintain ESI status;

- Other New means no prior NIH support as a Principal Investigator on a substantial independent research award, but not considered Early-Stage;

- At-Risk means prior support as a Principal Investigator on a substantial independent research award and, unless successful in securing a substantial research grant award in the current fiscal year, no substantial research grant funding in the following fiscal year; and

- Fully Established (referred to below simply as “Established”) means current substantial research support with at least one future year of support, irrespective of the results of the current year’s competition(s).
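Because the four categories are meant to be mutually exclusive, they can be expressed as a single decision function. The sketch below is a simplified, hypothetical encoding of the definitions above: the field names are invented for illustration, it treats "within 10 years" as at most 10, and it ignores details such as which smaller awards preserve ESI status.

```python
from dataclasses import dataclass
from enum import Enum


class CareerStage(Enum):
    EARLY_STAGE = "Early-Stage"
    OTHER_NEW = "Other New"
    AT_RISK = "At-Risk"
    ESTABLISHED = "Fully Established"


@dataclass(frozen=True)
class ApplicantHistory:
    # Hypothetical summary of one applicant's record in a given fiscal year.
    had_prior_substantial_award: bool   # ever PI on a substantial independent award
    funded_next_year_regardless: bool   # substantial support continues into next FY
    years_since_terminal_degree: float  # or since end of post-graduate clinical training


def career_stage(h: ApplicantHistory) -> CareerStage:
    """Assign exactly one stage, following the definitions in the text."""
    if h.had_prior_substantial_award:
        # Previously funded: Established if support continues into the next FY
        # whatever happens this year; otherwise At-Risk.
        if h.funded_next_year_regardless:
            return CareerStage.ESTABLISHED
        return CareerStage.AT_RISK
    # Never a PI on a substantial award: Early-Stage inside the 10-year window
    # (the boundary is assumed inclusive here).
    if h.years_since_terminal_degree <= 10:
        return CareerStage.EARLY_STAGE
    return CareerStage.OTHER_NEW


print(career_stage(ApplicantHistory(False, False, 4.0)).value)  # Early-Stage
print(career_stage(ApplicantHistory(True, False, 20.0)).value)  # At-Risk
```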

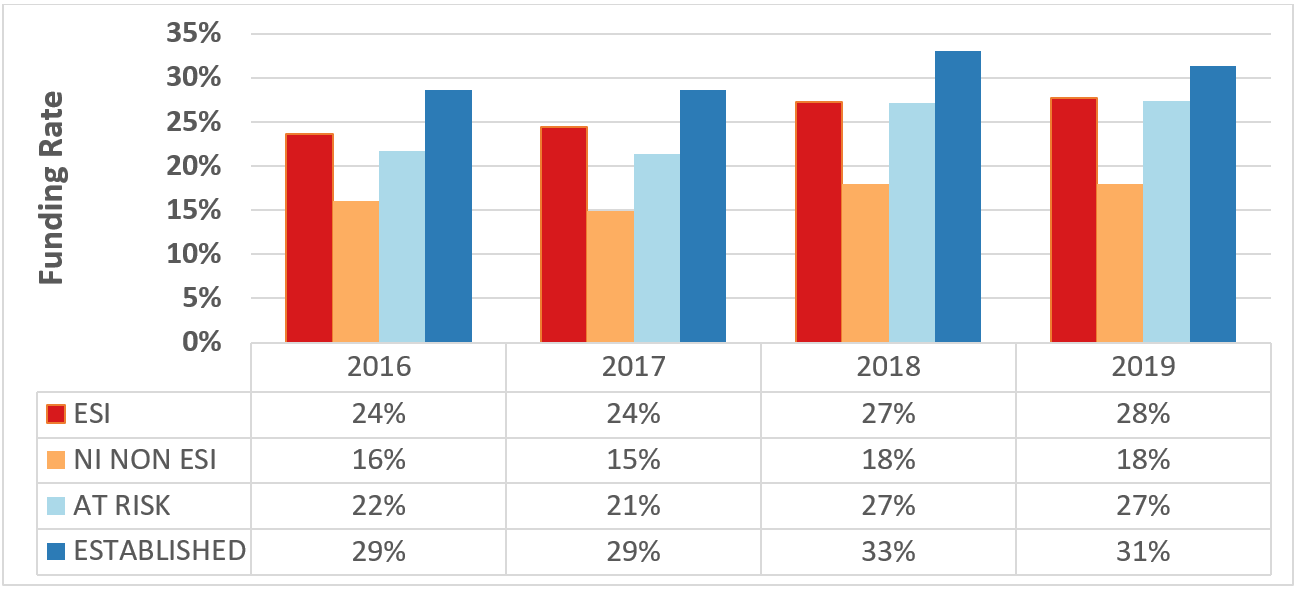

Figure 1 shows our main findings: the funding rate by career stage. The numbers beneath the x-axis are the same values shown by the heights of the bars. In 2016 and 2017, the funding rates for at-risk investigators were lower than for early-stage investigators (22% in 2016 and 21% in 2017, vs 24%) and much lower than for established investigators (22% and 21% vs 29%). The picture changed in 2018 and 2019. By 2019, the funding rate for at-risk investigators had increased to 27% (almost equivalent to that for early-stage investigators), only slightly lower than the funding rate for established investigators (31%).

Throughout fiscal years 2016-2019, though, at-risk investigators, for whom the stakes are arguably greater, had lower funding rates than established investigators. Why? Three possible explanations are:

- At-risk investigators submit fewer applications (effectively getting fewer “shots on goal”);

- At-risk investigators are less likely to get an application to the discussion stage in peer review; and/or

- At-risk investigators receive worse priority scores for those applications that make it to discussion in peer review.

Table 2 shows the number of applications submitted per applicant, by career stage, in FY2019. The difference is modest: among at-risk investigators, 84% submitted 1 or 2 applications (meaning 16% submitted more than 2), whereas among established investigators, 81% submitted 1 or 2 applications (meaning 19% submitted more than 2).

Table 2: Number of applications submitted by career stage in FY 2019

| Applications submitted | Early-Stage | Other New | At-Risk | Fully Established |
|---|---|---|---|---|
| 1 | 69% | 73% | 59% | 56% |
| 2 | 22% | 19% | 25% | 25% |
| 3 | 7% | 5% | 10% | 11% |
| 4 or more | 3% | 2% | 7% | 8% |
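A quick check of the arithmetic in the paragraph on Table 2, using only the published percentages. This is a sketch for illustration; the dictionary simply transcribes the table, and the helper name is hypothetical.

```python
# FY2019 shares (%) copied from Table 2. Each list is ordered
# [1 application, 2 applications, 3 applications, 4 or more].
TABLE_2 = {
    "Early-Stage": [69, 22, 7, 3],
    "Other New": [73, 19, 5, 2],
    "At-Risk": [59, 25, 10, 7],
    "Fully Established": [56, 25, 11, 8],
}


def split_shares(shares: list[int]) -> tuple[int, int]:
    """Return (% submitting 1 or 2 applications, % submitting more than 2)."""
    return shares[0] + shares[1], shares[2] + shares[3]


for stage, shares in TABLE_2.items():
    low, high = split_shares(shares)
    # Rounded shares need not sum to exactly 100.
    print(f"{stage}: 1-2 apps {low}%, >2 apps {high}%, row total {sum(shares)}%")
```

For at-risk investigators this gives 84% and 17%, which sum to 101% because of rounding, so the 16% quoted in the text is the complement 100 - 84. For established investigators, 81% and 19% agree exactly.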

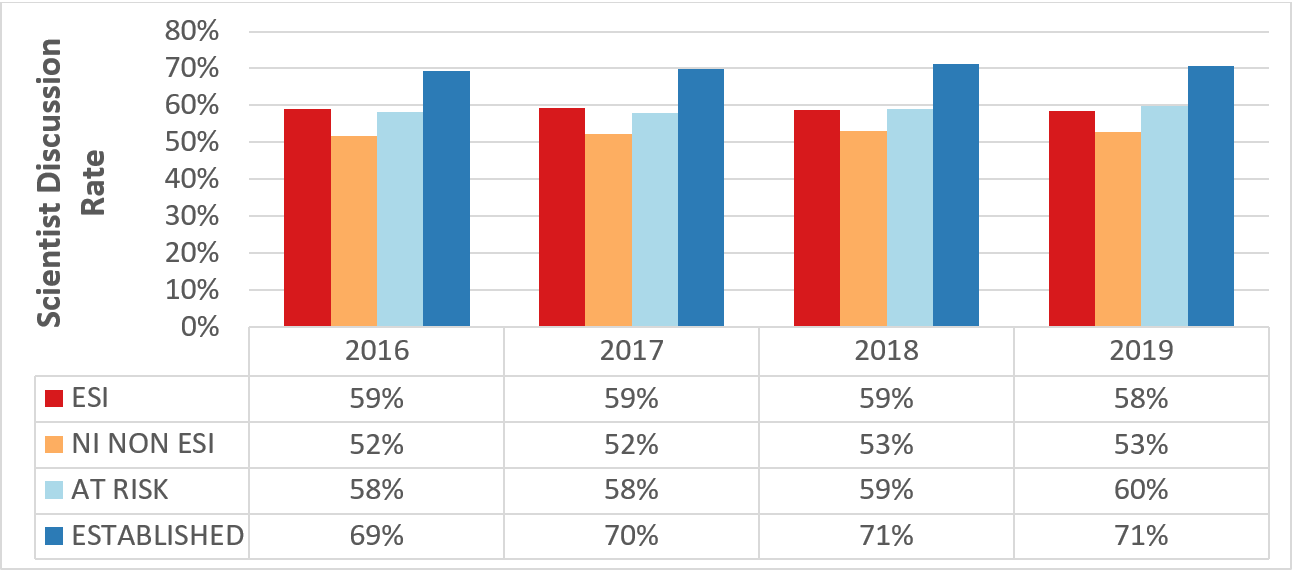

Figure 2 shows the scientist discussion rate, defined as the percentage of applicants who have at least one application discussed in study section. In every fiscal year, the rate for at-risk investigators is at least 10 percentage points below that for established investigators. In 2018 and 2019, 59% and 60% of at-risk investigators, respectively, saw at least one of their applications reach peer-review discussion, compared with 71% of established investigators.
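The scientist discussion rate is another person-based metric, so it can be sketched the same way as the funding rate: collapse each applicant's applications to a single yes/no before dividing. The input format below is a hypothetical one chosen for illustration.

```python
from collections import defaultdict


def scientist_discussion_rate(records: list[tuple[str, bool]]) -> float:
    """Share of unique applicants with at least one application discussed.

    `records` holds one hypothetical (applicant_id, was_discussed) pair
    per application submitted in the fiscal year.
    """
    any_discussed: dict[str, bool] = defaultdict(bool)
    for applicant_id, was_discussed in records:
        # An applicant counts once any single application is discussed.
        any_discussed[applicant_id] |= was_discussed
    if not any_discussed:
        raise ValueError("no applications")
    return sum(any_discussed.values()) / len(any_discussed)


records = [("A", False), ("A", True), ("B", False), ("C", False), ("C", False)]
print(scientist_discussion_rate(records))  # only "A" counts: 1 of 3 applicants
```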

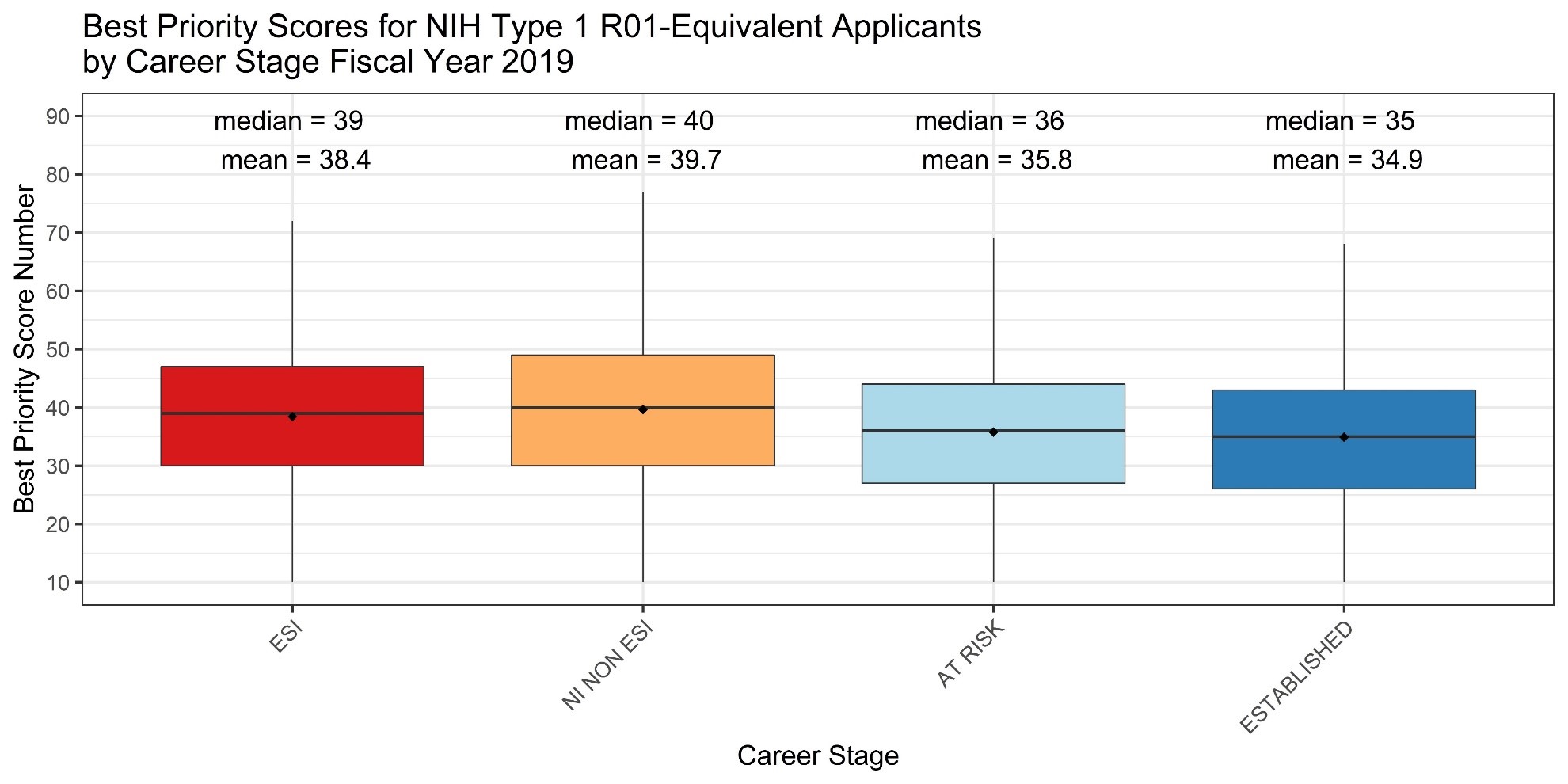

Figure 3 shows box plots of the distributions of best priority scores for applicants who had at least one application reach the discussion phase of peer review (lower scores are better). The priority scores for at-risk investigators are only slightly worse than those of established investigators.
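The quantity behind each box can be sketched in Python as follows, assuming a hypothetical list of (applicant, score) pairs for discussed applications only. Since lower NIH priority scores are better, an applicant's "best" score is the minimum; the standard-library `statistics.quantiles` then gives the quartiles that form a box. Whisker conventions vary between plotting tools and are not modeled here.

```python
import statistics


def best_scores(discussed: list[tuple[str, int]]) -> dict[str, int]:
    """Best (numerically lowest) priority score per applicant.

    `discussed` holds hypothetical (applicant_id, priority_score) pairs
    for discussed applications only; lower scores are better.
    """
    best: dict[str, int] = {}
    for applicant_id, score in discussed:
        best[applicant_id] = min(score, best.get(applicant_id, score))
    return best


def box_plot_summary(scores: list[int]) -> tuple[float, float, float]:
    """Quartiles (Q1, median, Q3) that define the box; needs 2+ scores."""
    q1, median, q3 = statistics.quantiles(scores, n=4)
    return q1, median, q3


scores = best_scores([("A", 35), ("A", 28), ("B", 41), ("C", 30), ("D", 45), ("E", 25)])
print(scores)
print(box_plot_summary(list(scores.values())))
```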

In summary, as with early-stage investigators, the picture for at-risk investigators improved somewhat in 2018 and 2019. Still, their funding rates are lower than those of established investigators, who are assured of continued funding for at least one more fiscal year. One possible explanation is that the peer review system is more likely to send applications from established investigators to the discussion phase.

This is an ongoing analysis, and there is more to come. But we are seeing both positive and concerning signals regarding this critically important group within the Next Generation Researchers Initiative. One promising development we have heard about is bridge funding offered by an investigator’s home institution: a year of funding if an at-risk applicant receives a score close to the payline, while the applicant continues to submit applications. Through programs such as these, bridge funding from NIH Institutes and Centers, tracking of funding trends for investigators at all career stages, and monitoring for unintended consequences, we will continue to pay close attention to the types of investigators we are supporting.
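The institutional bridge-funding idea can be expressed as a simple eligibility rule. The post does not say how institutions define "close to the payline," so everything below is an assumption for illustration: the function name, the use of percentiles, and the 5-point default margin.

```python
def eligible_for_bridge(is_at_risk: bool, percentile: float, payline: float,
                        margin: float = 5.0) -> bool:
    """Hypothetical institutional bridge-funding rule (illustration only).

    "Close to the payline" is modeled as missing the payline by at most
    `margin` percentile points; the 5-point default is an assumption.
    Applications at or better than the payline are assumed to be funded
    and so are not treated as needing a bridge.
    """
    return is_at_risk and payline < percentile <= payline + margin


print(eligible_for_bridge(True, 19.0, payline=17.0))   # True: 2 points over
print(eligible_for_bridge(True, 24.0, payline=17.0))   # False: beyond the margin
print(eligible_for_bridge(False, 19.0, payline=17.0))  # False: not at risk
```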

I am most grateful to my colleagues in the Division of Statistical Analysis and Reporting for their work on these analyses.

These data are not particularly surprising, since established (well-funded) investigators have more resources to produce preliminary data, impress the reviewers with flashy technology, and employ ‘shadow writers’ to prepare the applications.

Totally untrue. I have never heard of so-called shadow grant writers.

I feel devastated for science grant activities. Where is Collins? Who speaks to Congress?

We are becoming a third-world country with our low, low budget.

Personally, I feel that all these metrics on grants are a waste of time. You all should be lobbying Congress and the Administration for adequate budgets.

I suspect “shadow writers” in this context refers to senior postdocs, RAs, and research faculty. These scientists are obviously more common in well-funded labs and often shoulder a significant burden in grant preparation, raising both the number and quality of grants submitted by the senior investigator, with little, if any, intellectual credit in return. PIs operating on a single R01 or less are unlikely to have this type of assistance.

I’m disappointed it took so long for NIH to come to this realization. I’ve been “at risk” for 8 years, hanging on by collaborations and a supportive chair. It’s a vicious circle: little money, few resources and staff, low productivity, criticism on grants for low productivity, the need for more preliminary data, and time spent generating it only to be told flippantly that you’ve misinterpreted the reviewers’ comments. It’s an unfair system. I represent a whole generation of mid-career researchers who will not have the opportunity to fully develop their programs because of lack of support. Established PIs have resources and can be productive. Young PIs get “bumps.” The man in the middle gets squeezed. The worst part is that there is no recourse.

Fully agreed!

I was at risk and, with a 24th-percentile score, was encouraged to try again, but was then triaged. Now I have lost all NIH funding. It is a hard game. Unlike the speaker, I have saved enough and can retire, but instead I focus on training grants, teaching, and admin. There is another topic entirely: whether and how age or career-stage discrimination is legal. Part of the problem clearly is that we now have 35% of investigators. When I was a reviewer, I was told sternly that I should not grade down investigators with 5 R01s who are writing another one along the same lines for another 10%. I still voted them down for lack of innovation, given the topics of their other 5 grants, and for lack of available investigator effort, but the haves are always given more than the have-nots. Here is one reason why we have this dilemma: https://nexus.od.nih.gov/all/wp-content/uploads/2015/03/2.jpg

Thank you! My A0 received a 19th percentile score (funding line at the 17th percentile) at NHLBI. The only criticism was formatting. FORMATTING. NOT SCIENCE. NOT HYPOTHESIS or TECHNICAL FEASIBILITY, but formatting. When it went back as an A1, I had 3 new reviewers who told me I had misinterpreted the comments and gave me a 37th percentile. No, I didn’t misinterpret. Nor did my departmental leadership, who reviewed my responses to the critiques provided. It is a system gone wrong.

The NIH is ignoring the real “bias” in the system, but everyone is afraid to say what it is due to political pressures and blowback.

The NIH is penny-wise and pound-foolish. So much $$$$ was invested in my training through grad school and postdoctoral training. Now I am at the peak of my scientific ability, with great ideas, questions, and approaches, but I can barely keep my head above water because I can’t get 3 misinformed, myopic reviewers to agree simultaneously. Wasted investment.

Completely agree.

I am in the position of having been a postdoc longer than anticipated, and I was the right-hand research scientist for my PI for 10 years. When he passed away, I was placed on his R01s (he was in his late 50s and successful). This ruined my New and ESI status for my own R01 applications, so I was put in the category of a standard established PI. I found my grant applications were scored with this bias (why didn’t I have senior-author publications if I had R01s?). It was difficult to explain the situation to the reviewers. I have found that my colleagues who grew up in the era of a 22% funding rate (now 55-70) are very successful at getting grants with writing and preliminary data that are not that different from mine. Several have read my applications and thought them good enough to be scored. It does not help that my topic is a “hot topic,” with a lot of dogma and competition for the same dollars. Others and I have had reviews where two reviewers gave scores of 1-4 and one reviewer gave 8-9, ruining any chance of discussion or funding. There needs to be a policy in place to review such scoring discrepancies, potentially by bringing in a 4th reviewer to determine whether a reviewer was biased. I have seen this type of bias at least 3 times for 3 different colleagues. The CSR panels need to rotate more (do not have standing study section members). All recipients should participate in a review panel at least once; do not make it voluntary. I find it disturbing to see the same competitors in review sections over and over. Also not helping is how publishing has changed. European labs are putting out massive articles in Nature and Cell that become the expectation of grant reviewers. Middle-tier articles are diffuse due to the massive expansion of “legitimate” online journals. Fewer and fewer “experts” are available to review so many of these articles for accuracy within 10 days.

For myself, I am out of a job in a year. I am funded only by grants, I cannot move (still a negative for people with families, btw), and I cannot get the upper hand due to financial limitations and therefore personnel limitations as well. The world will lose an “expert” in a field because the NIH system, my institute, and my life do not line up in a way that lets me keep working on research.

Totally agree, particularly because the current mid-career investigators are those who started their careers during the last recession, when it was way more difficult to get funded than it is for current ESIs.

Somehow, though, we survived, and we stay productive by all means, with private and local funds. But that is not enough for the NIH: if we do not have an NIH funding history, does that mean we do not deserve it? We cannot get into the NIH funding system. Preliminary data and publications are generated with limited funding, but that is not considered during the review process. We have struggled enough to deserve consideration, I believe.

K awardees, clinical scientists, and early-career investigators are prioritized for funding. This bias has been in place for decades and is breaking a system that should be merit-driven. The data show that very few new treatments have been discovered despite all the billions invested. There is also a flawed assumption that the newest technology and trendiest idea should be funded, rather than solid hypothesis-driven science using established, validated technologies. There is also no reward for publishing negative data that would keep other scientists from pursuing ‘wild goose chase’ projects. To mitigate this systematic failure, I suggest that applications be triaged in a double-blind review by overseas, impartial, paid review panels. A second round of unblinded review should be conducted through an internal NIH process. Cronyism and bias need to be weeded out so that only the best (and not the most expensive) science is funded. Small grants should be available for scientists to write up negative data so that universities don’t reduce their research time in favor of teaching and clinical duties.

Whatever happened to “dual” peer review? Currently, “at-risk” applicants have no recourse to appeal bogus reviews, no matter how much nonsense is spouted in the review process.

How about adding some teeth to NOT-OD-11-064 and use it to eliminate biased reviewers and actually fund deserving applicants?

Currently, the NIH rubber-stamps complaints, pretending they are all made without merit. Few, if any, grants are awarded to “at-risk” applicants under appeal. By “few” I bet this means “none,” but I don’t have access to those data.

I was NIH-funded as a PI for 25 years and suddenly find myself “at risk,” with no funding to prepare preliminary data and upgrade my applications, all of which were discussed, with priority scores in the 20% range. I am doing the best, most innovative work of my life, and this is heartbreaking. I was told by NIMH program directors that the cutoff is 17%, while my most recent proposal received a 21%. The publicly reported funding cap at NIMH is 22%! How can this be? My study section reviews typically have 1s and 2s from two reviewers and one outlier with a 4, 6, or 7 and uninformed comments, which kills my score. There is no normalization or dropping of outliers, and no process for remediation of false criticisms.

Something in the system is not working. Last year I submitted 5 NIH grants. But without funds for more data, I don’t know how much longer I can go on. My position is at a minority-serving institution, but diversity also does not seem to give me a boost. This is a worrisome situation for productive researchers who receive nonsensical criticisms from study sections.

Linda is absolutely correct!

I’ve been an “at risk” investigator many times, so I’m pleased that you are giving this problem attention. The underlying problem in my case is the ‘tyranny of the specific aims’. It’s easy to fill out research details/specific aims of well-established problems with well-developed methods. But, if one is working at the bleeding edge where the next step is not always clear, study sections tend not to give fundable priority scores. A friend of mine once said there are two kinds of scientists, map makers and explorers. Study sections prefer map makers.

The funding is biased to animal models because the results are “more reproducible” but patients are not reproducible, so what is the SIGNIFICANCE?

Maybe if NIH had asked institutional trustees three decades ago to contribute to sustaining faculty who are at risk, based on some agreed metrics (they do greatly appreciate the overhead that comes with NIH grants!!), there would be fewer at-risk situations. In fact, that is what the current Director suggested when he was appointed, but nothing was done. The statistical games shown do not address the heart of the matter: institutions need to be cajoled by NIH to provide these bridge funds.

Not surprising. I used to have 4 R01s at one point, and funding seemed to flow; there was a sense that our work was respected by default. Now that I only have one R01 in NCE, all the study sections spit at my grants with contempt, and no preliminary data is enough. Peer review has a “wolf pack,” irrational cruelty to it. One of the reasons the system is unfair is that the reviewee feels completely defenseless, and, as Mike Lauer points out, it introduces biases. A possible solution would be to introduce technology that allows a real-time (one-way) answer to the study section: as soon as the reviews are available, the reviewee could submit a one-page rebuttal (for the study section to discuss) to clear up some of the misunderstandings and biases without having to wait another cycle. That would be a fair way for the reviewee to rationally defend him/herself.

I’m intrigued by this and support it. Without a way to respond to reviewers’ comments in real time, one has to wait until the next submission cycle. Very likely, the next set of reviewers’ comments will either 1) have nothing to do with the initial comments or, worse yet, 2) refute the initial comments, so that all the changes made since the previous submission are invalidated. It is an irrational model.

I believe the internal NIH funding process actually does this. It is a two-day process, and the second day is for rebuttal, I believe. So internal members get a benefit that outside researchers do not get.

I agree, being able to respond to criticisms in real time would be really helpful (and prevent the wasted time of writing and rewriting applications in successive rounds of submission). In the UK this is done by interview of the applicant with the review panel after the grant is shortlisted.

Very nice analysis – thank you for shining the light on this vulnerable group and their relevance to the biomedical workforce. Institutional bridge funding for applicants who score highly but do not get funded on initial submission is a worthwhile investment which our institution (MGH) has implemented to great success. It is reassuring to see a modest upward trend in the funding rate of at-risk investigators after the rollout of the Next Generation Researchers’ Initiative.

There should be a mechanism for NIH bridge funding if you are at risk. For instance, if a PI has consistently published in respected journals and ends up without funding, s/he should be able to get, say, $20K or $30K per year (for up to 3 years, say) to fund at least a grad student plus supplies, keeping a lab afloat, research going, and skills alive. That would be a small investment for NIH with potentially big impact.

I am saying this as a PI without current funding, after having had 2 R01s over the past 10 years that produced a total of ~80 papers and consistently ~1000 citations per year (including older papers, obviously). Once I ran out of funding, my department head swiftly took away my lab and gave it to someone else after about 3 months. As a tenured professor, I won’t have trouble keeping myself busy via collaborations etc., but it will be difficult to establish a lab again, even with new funding, any time soon.

I am reading these comments with great concern for individuals who are trying to enter the research workforce. As one comment pointed out, a single reviewer can place an investigator’s score outside the funding range (leaving NIH Program officials to justify “skips” in funding order), and only through advocacy by internal NIH Programs can specific applications get support if funds are available. Investing in new areas of research is critical, particularly by young investigators who are making a path for themselves after years of training. Reviewers are often unsympathetic to, or do not accurately reflect, initiatives to engage these individuals in activities that transition them from postdoctoral status to early-stage researchers. Many of these promising individuals are lost to continuing research careers at this point. I hope that this system of collaboration between Review and Program can be adjusted so that innovative platforms can be developed to continue support.

I think one of the best strategies is to provide an R21 funding mechanism that is used to obtain preliminary data for an R01. This would bridge the potential loss in funding that occurs when a major award is lost. Once you lose funding, reviewers look at this poorly, and I think that can drive scores down when reviewing R01s. We are not supposed to consider whether an investigator is already funded by multiple R01s, but there is never a message that one should ignore a lack of current NIH funding.